skrub.GapEncoder#

Usage examples at the bottom of this page.

- class skrub.GapEncoder(*, n_components=10, batch_size=1024, gamma_shape_prior=1.1, gamma_scale_prior=1.0, rho=0.95, rescale_rho=False, hashing=False, hashing_n_features=4096, init='k-means++', max_iter=5, ngram_range=(2, 4), analyzer='char', add_words=False, random_state=None, rescale_W=True, max_iter_e_step=1, max_no_improvement=5, handle_missing='zero_impute', n_jobs=None, verbose=0)[source]#

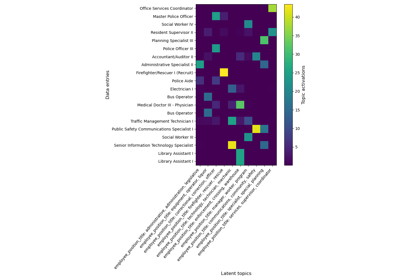

Constructs latent topics with continuous encoding.

This encoder can be understood as a continuous encoding on a set of latent categories estimated from the data. The latent categories are built by capturing combinations of substrings that frequently co-occur.

The GapEncoder supports online learning on batches of data for scalability through the GapEncoder.partial_fit method.

The principle is as follows:

Given an input string array X, we build its bag-of-n-grams representation V (n_samples, vocab_size).

Instead of using the n-grams counts as encodings, we look for low- dimensional representations by modeling n-grams counts as linear combinations of topics

V = HW, with W (n_topics, vocab_size) the topics and H (n_samples, n_topics) the associated activations.Assuming that n-grams counts follow a Poisson law, we fit H and W to maximize the likelihood of the data, with a Gamma prior for the activations H to induce sparsity.

In practice, this is equivalent to a non-negative matrix factorization with the Kullback-Leibler divergence as loss, and a Gamma prior on H. We thus optimize H and W with the multiplicative update method.

“Gap” stands for “Gamma-Poisson”, the families of distributions that are used to model the importance of topics in a document (Gamma), and the term frequencies in a document (Poisson).

- Parameters:

- n_components

int, optional, default=10 Number of latent categories used to model string data.

- batch_size

int, optional, default=1024 Number of samples per batch.

- gamma_shape_prior

float, optional, default=1.1 Shape parameter for the Gamma prior distribution.

- gamma_scale_prior

float, optional, default=1.0 Scale parameter for the Gamma prior distribution.

- rho

float, optional, default=0.95 Weight parameter for the update of the W matrix.

- rescale_rho

bool, optional, default=False If True, use

rho ** (batch_size / len(X))instead of rho to obtain an update rate per iteration that is independent of the batch size.- hashing

bool, optional, default=False If True, HashingVectorizer is used instead of CountVectorizer. It has the advantage of being very low memory, scalable to large datasets as there is no need to store a vocabulary dictionary in memory.

- hashing_n_features

int, default=2**12 Number of features for the HashingVectorizer. Only relevant if hashing=True.

- init{‘k-means++’, ‘random’, ‘k-means’}, default=’k-means++’

Initialization method of the W matrix. If init=’k-means++’, we use the init method of KMeans. If init=’random’, topics are initialized with a Gamma distribution. If init=’k-means’, topics are initialized with a KMeans on the n-grams counts.

- max_iter

int, default=5 Maximum number of iterations on the input data.

- ngram_range

int2-tuple, default=(2, 4) - The lower and upper boundaries of the range of n-values for different

n-grams used in the string similarity. All values of n such that

min_n <= n <= max_nwill be used.

- analyzer{‘word’, ‘char’, ‘char_wb’}, default=’char’

Analyzer parameter for the HashingVectorizer / CountVectorizer. Describes whether the matrix V to factorize should be made of word counts or character-level n-gram counts. Option ‘char_wb’ creates character n-grams only from text inside word boundaries; n-grams at the edges of words are padded with space.

- add_words

bool, default=False If True, add the words counts to the bag-of-n-grams representation of the input data.

- random_state

intorRandomState, optional Random number generator seed for reproducible output across multiple function calls.

- rescale_W

bool, default=True If True, the weight matrix W is rescaled at each iteration to have a l1 norm equal to 1 for each row.

- max_iter_e_step

int, default=1 Maximum number of iterations to adjust the activations h at each step.

- max_no_improvement

int, default=5 Control early stopping based on the consecutive number of mini batches that do not yield an improvement on the smoothed cost function. To disable early stopping and run the process fully, set

max_no_improvement=None.- handle_missing{‘error’, ‘empty_impute’}, default=’empty_impute’

Whether to raise an error or impute with empty string (‘’) if missing values (NaN) are present during GapEncoder.fit (default is to impute). In GapEncoder.inverse_transform, the missing categories will be denoted as None. “Missing values” are any value for which

pandas.isnareturnsTrue, such asnumpy.nanorNone.- n_jobs

int, optional The number of jobs to run in parallel. The process is parallelized column-wise, meaning each column is fitted in parallel. Thus, having n_jobs > X.shape[1] will not speed up the computation.

- verbose

int, default=0 Verbosity level. The higher, the more granular the logging.

- n_components

See also

MinHashEncoderEncode string columns as a numeric array with the minhash method.

SimilarityEncoderEncode string columns as a numeric array with n-gram string similarity.

deduplicateDeduplicate data by hierarchically clustering similar strings.

References

For a detailed description of the method, see Encoding high-cardinality string categorical variables by Cerda, Varoquaux (2019).

Examples

>>> enc = GapEncoder(n_components=2, random_state=0)

Let’s encode the following non-normalized data:

>>> X = [['paris, FR'], ['Paris'], ['London, UK'], ['Paris, France'], ... ['london'], ['London, England'], ['London'], ['Pqris']]

>>> enc.fit(X) GapEncoder(n_components=2, random_state=0)

The GapEncoder has found the following two topics:

>>> enc.get_feature_names_out() array(['england, london, uk', 'france, paris, pqris'], dtype=object)

It got it right, reccuring topics are “London” and “England” on the one side and “Paris” and “France” on the other.

As this is a continuous encoding, we can look at the level of activation of each topic for each category:

>>> enc.transform(X) array([[ 0.051..., 10.548...], [ 0.050..., 4.549...], [12.046..., 0.053...], [ 0.052..., 16.547...], [ 6.049..., 0.050...], [19.545..., 0.054...], [ 6.049..., 0.050...], [ 0.060..., 4.539...]])

The higher the value, the bigger the correspondence with the topic.

- Attributes:

Methods

fit(X[, y])Fit the instance on X.

fit_transform(X[, y])Fit to data, then transform it.

get_feature_names_out([col_names, n_labels, ...])Return the labels that best summarize the learned components/topics.

Get metadata routing of this object.

get_params([deep])Get parameters for this estimator.

partial_fit(X[, y])Partial fit this instance on X.

score(X[, y])Score this instance on X.

set_output(*[, transform])Set output container.

set_params(**params)Set the parameters of this estimator.

transform(X)Return the encoded vectors (activations) H of input strings in X.

- fit(X, y=None)[source]#

Fit the instance on X.

- Parameters:

- Xarray_like, shape (n_samples, n_features)

The string data to fit the model on.

- y

None Unused, only here for compatibility.

- Returns:

GapEncoderThe fitted GapEncoder instance (self).

- fit_transform(X, y=None, **fit_params)[source]#

Fit to data, then transform it.

Fits transformer to X and y with optional parameters fit_params and returns a transformed version of X.

- Parameters:

- Xarray_like of shape (n_samples, n_features)

Input samples.

- yarray_like of shape (n_samples,) or (n_samples, n_outputs), default=None

Target values (None for unsupervised transformations).

- **fit_params

dict Additional fit parameters.

- Returns:

- get_feature_names_out(col_names=None, n_labels=3, input_features=None)[source]#

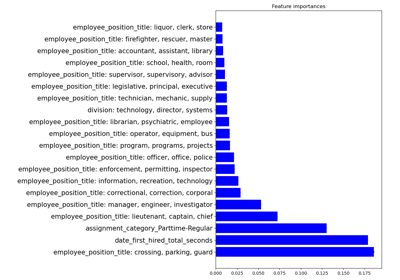

Return the labels that best summarize the learned components/topics.

For each topic, labels with the highest activations are selected.

- Parameters:

- col_names‘auto’ or

listofstr, optional The column names to be added as prefixes before the labels. If col_names=None, no prefixes are used. If col_names=’auto’, column names are automatically defined:

if the input data was a

DataFrame, its column names are used,otherwise, ‘col1’, …, ‘colN’ are used as prefixes.

Prefixes can be manually set by passing a list for col_names.

- n_labels

int, default=3 The number of labels used to describe each topic.

- input_features

None Unused, only here for compatibility.

- col_names‘auto’ or

- Returns:

- get_metadata_routing()[source]#

Get metadata routing of this object.

Please check User Guide on how the routing mechanism works.

- Returns:

- routingMetadataRequest

A

MetadataRequestencapsulating routing information.

- partial_fit(X, y=None)[source]#

Partial fit this instance on X.

To be used in an online learning procedure where batches of data are coming one by one.

- Parameters:

- Xarray_like, shape (n_samples, n_features)

The string data to fit the model on.

- y

None Unused, only here for compatibility.

- Returns:

GapEncoderThe fitted GapEncoder instance (self).

- score(X, y=None)[source]#

Score this instance on X.

Returns the sum over the columns of X of the Kullback-Leibler divergence between the n-grams counts matrix V of X, and its non-negative factorization HW.

- Parameters:

- Xarray_like, shape (n_samples, n_features)

The data to encode.

- yIgnored

Not used, present for API consistency by convention.

- Returns:

floatThe Kullback-Leibler divergence.

- set_output(*, transform=None)[source]#

Set output container.

See Introducing the set_output API for an example on how to use the API.

- Parameters:

- transform{“default”, “pandas”}, default=None

Configure output of transform and fit_transform.

“default”: Default output format of a transformer

“pandas”: DataFrame output

None: Transform configuration is unchanged

- Returns:

- selfestimator instance

Estimator instance.

- set_params(**params)[source]#

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as

Pipeline). The latter have parameters of the form<component>__<parameter>so that it’s possible to update each component of a nested object.- Parameters:

- **params

dict Estimator parameters.

- **params

- Returns:

- selfestimator instance

Estimator instance.

- transform(X)[source]#

Return the encoded vectors (activations) H of input strings in X.

Given the learnt topics W, the activations H are tuned to fit

V = HW. When X has several columns, they are encoded separately and then concatenated.Remark: calling transform multiple times in a row on the same input X can give slightly different encodings. This is expected due to a caching mechanism to speed things up.

- Parameters:

- Xarray_like, shape (n_samples, n_features)

The string data to encode.

- Returns:

ndarray, shape (n_samples, n_topics * n_features)Transformed input.

Examples using skrub.GapEncoder#

Encoding: from a dataframe to a numerical matrix for machine learning