ToDatetime

- class skrub.ToDatetime(format=None)

Parse datetimes represented as strings and return Datetime columns.

Note

ToDatetime is a type of single-column transformer. Unlike most scikit-learn estimators, its fit, transform and fit_transform methods expect a single column (a pandas or polars Series) rather than a full dataframe. To apply this transformer to one or more columns in a dataframe, use it as a parameter in a skrub.TableVectorizer or sklearn.compose.ColumnTransformer. In the ColumnTransformer, pass a single column: make_column_transformer((ToDatetime(), 'col_name_1'), (ToDatetime(), 'col_name_2')) instead of make_column_transformer((ToDatetime(), ['col_name_1', 'col_name_2'])).

This transformer tries to convert the given column from string to datetime, by either testing common datetime formats or using the format specified by the user. Columns that are not strings, dates or datetimes raise an exception.

- Parameters:

- format

str or None, optional, default=None

Format to use for parsing dates that are stored as strings, e.g. "%Y-%m-%dT%H:%M:%S". If not specified, the format is inferred from the data when possible. When doing so, for dates presented as 01/02/2003, it is usually possible to infer from the data whether the month comes first (USA convention) or the day comes first, i.e. "%m/%d/%Y" vs "%d/%m/%Y". In the unlikely case that all the sampled dates fall before the 13th day of the month, so that both conventions are plausible, the USA convention (month first) is chosen.


- Attributes:

- format_

str or None

Detected format. If the transformer was fitted on a column that already had a Datetime dtype, format_ is None. Otherwise it is the format that was detected when parsing the string column. If the parameter format was provided, it is the only one that the transformer attempts to use, so in that case format_ is either None or equal to format.

- output_dtype_

data type

The output dtype, which includes information about the time resolution and time zone.

- output_time_zone_

str or None

The time zone of the transformed column. If the output is time zone naive it is None; otherwise it is the name of the time zone, such as UTC or Europe/Paris.


Notes

An input column is converted to a column with dtype Datetime if possible, and rejected by raising a RejectColumn exception otherwise. Only Date, Datetime, String, and pandas object columns are handled; other dtypes are rejected with RejectColumn.

Once a column is accepted, outputs of transform always have the same Datetime dtype (including resolution and time zone). Once the transformer is fitted, entries that fail to be converted during subsequent calls to transform are replaced with nulls.

Examples

>>> import pandas as pd

>>> s = pd.Series(["2024-05-05T13:17:52", None, "2024-05-07T13:17:52"], name="when")
>>> s
0    2024-05-05T13:17:52
1                   None
2    2024-05-07T13:17:52
Name: when, dtype: object

>>> from skrub import ToDatetime

>>> to_dt = ToDatetime()
>>> to_dt.fit_transform(s)
0   2024-05-05 13:17:52
1                   NaT
2   2024-05-07 13:17:52
Name: when, dtype: datetime64[...]

The attributes format_, output_dtype_ and output_time_zone_ record information about the conversion result.

>>> to_dt.format_
'%Y-%m-%dT%H:%M:%S'
>>> to_dt.output_dtype_
dtype('<M8[...]')
>>> to_dt.output_time_zone_ is None
True

If we provide the datetime format, it is used and columns that do not conform to it are rejected.

>>> ToDatetime(format="%Y-%m-%dT%H:%M:%S").fit_transform(s)
0   2024-05-05 13:17:52
1                   NaT
2   2024-05-07 13:17:52
Name: when, dtype: datetime64[...]

>>> ToDatetime(format="%d/%m/%Y").fit_transform(s)
Traceback (most recent call last):
    ...
skrub._apply_to_cols.RejectColumn: Failed to convert column 'when' to datetimes using the format '%d/%m/%Y'.

Columns that already have Datetime dtype are not modified (but they are accepted); for those columns the provided format, if any, is ignored.

>>> s = pd.to_datetime(s).dt.tz_localize("Europe/Paris")
>>> s
0   2024-05-05 13:17:52+02:00
1                         NaT
2   2024-05-07 13:17:52+02:00
Name: when, dtype: datetime64[..., Europe/Paris]
>>> to_dt.fit_transform(s) is s
True

In that case, format_ is None.

>>> to_dt.format_ is None
True
>>> to_dt.output_dtype_
datetime64[..., Europe/Paris]
>>> to_dt.output_time_zone_
'Europe/Paris'

Columns that have a dtype other than strings, pandas objects, or datetimes are rejected.

>>> s = pd.Series([2020, 2021, 2022], name="year")
>>> to_dt.fit_transform(s)
Traceback (most recent call last):
    ...
skrub._apply_to_cols.RejectColumn: Column 'year' does not contain strings.

String columns that do not appear to contain datetimes or for some other reason fail to be converted are also rejected.

>>> s = pd.Series(["2024-05-07T13:36:27", "yesterday"], name="when")
>>> to_dt.fit_transform(s)
Traceback (most recent call last):
    ...
skrub._apply_to_cols.RejectColumn: Could not find a datetime format for column 'when'.

Once ToDatetime has been successfully fitted, transform will always try to parse datetimes with the same format and output the same dtype. Entries that fail to be converted result in a null value:

>>> s = pd.Series(["2024-05-05T13:17:52", None, "2024-05-07T13:17:52"], name="when")
>>> to_dt = ToDatetime().fit(s)
>>> to_dt.transform(s)
0   2024-05-05 13:17:52
1                   NaT
2   2024-05-07 13:17:52
Name: when, dtype: datetime64[...]
>>> s = pd.Series(["05/05/2024", None, "07/05/2024"], name="when")
>>> to_dt.transform(s)
0   NaT
1   NaT
2   NaT
Name: when, dtype: datetime64[...]
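The fit-then-transform contract described above can be illustrated with a small standard-library sketch. This is not skrub's implementation: the class FixedFormatParser and its candidate_formats argument are hypothetical names introduced only for illustration, and None stands in for NaT.

```python
from datetime import datetime


class FixedFormatParser:
    """Toy stand-in for the fit/transform contract (hypothetical, not skrub code)."""

    def __init__(self, candidate_formats):
        self.candidate_formats = candidate_formats

    @staticmethod
    def _parse(value, fmt):
        # Return a datetime, or None when the value does not match the format.
        try:
            return datetime.strptime(value, fmt)
        except ValueError:
            return None

    def fit(self, values):
        # Keep the first candidate format that parses every non-null value.
        non_null = [v for v in values if v is not None]
        for fmt in self.candidate_formats:
            if all(self._parse(v, fmt) is not None for v in non_null):
                self.format_ = fmt
                return self
        # Plays the role of rejecting the column at fit time.
        raise ValueError("Could not find a datetime format")

    def transform(self, values):
        # After fit, only the stored format is used; failures become None.
        return [None if v is None else self._parse(v, self.format_) for v in values]


parser = FixedFormatParser(["%Y-%m-%dT%H:%M:%S", "%d/%m/%Y"])
parser.fit(["2024-05-05T13:17:52", None, "2024-05-07T13:17:52"])
fitted_format = parser.format_
later_result = parser.transform(["05/05/2024", None, "07/05/2024"])
print(fitted_format)
print(later_result)
```

The design point is that a failure at fit time is an error for the whole column, whereas a failure at transform time only nulls the offending entries, so the output type stays stable.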

Time zones

During fit, parsing strings that contain fixed offsets results in datetimes in UTC. Mixed offsets are supported and will all be converted to UTC.

>>> s = pd.Series(["2020-01-01T04:00:00+02:00", "2020-01-01T04:00:00+03:00"])
>>> to_dt.fit_transform(s)
0   2020-01-01 02:00:00+00:00
1   2020-01-01 01:00:00+00:00
dtype: datetime64[..., UTC]
>>> to_dt.format_
'%Y-%m-%dT%H:%M:%S%z'
>>> to_dt.output_time_zone_
'UTC'
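The arithmetic behind the conversion of mixed offsets to UTC can be reproduced with the standard library alone. The following is a minimal sketch, assuming the same two input strings as above; the helper parse_to_utc is a hypothetical name, not part of skrub.

```python
from datetime import datetime, timezone

FORMAT = "%Y-%m-%dT%H:%M:%S%z"  # same format string as detected above


def parse_to_utc(text, fmt=FORMAT):
    """Parse a string carrying a UTC offset and express the instant in UTC."""
    return datetime.strptime(text, fmt).astimezone(timezone.utc)


parsed = [
    parse_to_utc(text)
    for text in ["2020-01-01T04:00:00+02:00", "2020-01-01T04:00:00+03:00"]
]
for dt in parsed:
    print(dt.isoformat())
```

Each value keeps the instant it denotes; only the wall-clock representation changes, which is why 04:00 at +02:00 and 04:00 at +03:00 become 02:00 and 01:00 in UTC.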

Strings with no timezone indication result in naive datetimes:

>>> s = pd.Series(["2020-01-01T04:00:00", "2020-01-01T04:00:00"])
>>> to_dt.fit_transform(s)
0   2020-01-01 04:00:00
1   2020-01-01 04:00:00
dtype: datetime64[...]
>>> to_dt.output_time_zone_ is None
True

During transform, outputs are cast to the same dtype that was found during fit. This includes the timezone, which is converted if necessary.

>>> s_paris = pd.to_datetime(
...     pd.Series(["2024-05-07T14:24:49", "2024-05-06T14:24:49"])
... ).dt.tz_localize("Europe/Paris")
>>> s_paris
0   2024-05-07 14:24:49+02:00
1   2024-05-06 14:24:49+02:00
dtype: datetime64[..., Europe/Paris]
>>> to_dt = ToDatetime().fit(s_paris)
>>> to_dt.output_dtype_
datetime64[..., Europe/Paris]

Here our converter is set to output datetimes with nanosecond resolution, localized in “Europe/Paris”.

We may have a column in a different timezone:

>>> s_london = s_paris.dt.tz_convert("Europe/London")
>>> s_london
0   2024-05-07 13:24:49+01:00
1   2024-05-06 13:24:49+01:00
dtype: datetime64[..., Europe/London]

Here the timezone is “Europe/London” and the times are offset by 1 hour. During transform, datetimes will be converted to the original dtype and the “Europe/Paris” timezone:

>>> to_dt.transform(s_london)
0   2024-05-07 14:24:49+02:00
1   2024-05-06 14:24:49+02:00
dtype: datetime64[..., Europe/Paris]

Moreover, we may have to transform a timezone-naive column whereas the transformer was fitted on a timezone-aware column. Note that this is somewhat of a corner case, unlikely to happen in practice if the inputs to fit and transform come from the same dataframe.

>>> s_naive = s_paris.dt.tz_convert(None)
>>> s_naive
0   2024-05-07 12:24:49
1   2024-05-06 12:24:49
dtype: datetime64[...]

In this case, we make the arbitrary choice to assume that the timezone-naive datetimes are in UTC.

>>> to_dt.transform(s_naive)
0   2024-05-07 14:24:49+02:00
1   2024-05-06 14:24:49+02:00
dtype: datetime64[..., Europe/Paris]

Conversely, a transformer fitted on a timezone-naive column can convert timezone-aware columns. Here also, we assume the naive datetimes were in UTC.

>>> to_dt = ToDatetime().fit(s_naive)
>>> to_dt.transform(s_london)
0   2024-05-07 12:24:49
1   2024-05-06 12:24:49
dtype: datetime64[...]
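The "naive means UTC" rule used in both directions above can be sketched with standard-library datetimes. This is only an illustration of the rule, not skrub's code: to_fitted_zone is a hypothetical helper, and fixed offsets (+02:00 and +01:00) stand in for Europe/Paris and Europe/London in May so that no time zone database lookup is needed.

```python
from datetime import datetime, timedelta, timezone

# Assumption: fixed offsets approximate the named zones on these dates.
PARIS_SUMMER = timezone(timedelta(hours=2))
LONDON_SUMMER = timezone(timedelta(hours=1))


def to_fitted_zone(dt, fitted_tz):
    """Cast dt to the zone seen during fit; fitted_tz=None means naive output."""
    if dt.tzinfo is None:
        # Naive inputs are assumed to be in UTC.
        dt = dt.replace(tzinfo=timezone.utc)
    if fitted_tz is None:
        # Naive output: express the instant in UTC, then drop the zone.
        return dt.astimezone(timezone.utc).replace(tzinfo=None)
    return dt.astimezone(fitted_tz)


naive = datetime(2024, 5, 7, 12, 24, 49)
london = datetime(2024, 5, 7, 13, 24, 49, tzinfo=LONDON_SUMMER)

aware_result = to_fitted_zone(naive, PARIS_SUMMER)  # fitted on an aware column
naive_result = to_fitted_zone(london, None)         # fitted on a naive column
print(aware_result.isoformat())
print(naive_result.isoformat())
```

Both results denote the same instant as their inputs under the UTC assumption, matching the 14:24:49+02:00 and 12:24:49 values shown in the examples above.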

%d/%m/%Y vs %m/%d/%Y

When parsing strings in one of the formats above, ToDatetime tries to guess from the data whether the month comes first (USA convention) or the day (rest of the world).

>>> s = pd.Series(["05/23/2024"])
>>> to_dt.fit_transform(s)
0   2024-05-23
dtype: datetime64[...]
>>> to_dt.format_
'%m/%d/%Y'

Here we could infer '%m/%d/%Y' because there are not 23 months in a year. Similarly,

>>> s = pd.Series(["23/05/2024"])
>>> to_dt.fit_transform(s)
0   2024-05-23
dtype: datetime64[...]
>>> to_dt.format_
'%d/%m/%Y'

In the case it cannot be inferred, the USA convention is used:

>>> s = pd.Series(["03/05/2024"])
>>> to_dt.fit_transform(s)
0   2024-03-05
dtype: datetime64[...]
>>> to_dt.format_
'%m/%d/%Y'
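The disambiguation rule shown in these examples can be sketched with the standard library. This is a simplified illustration, not how skrub implements it: guess_day_month_format is a hypothetical helper that checks every value, whereas the real transformer may work on a sample.

```python
from datetime import datetime


def guess_day_month_format(values):
    """Return "%m/%d/%Y" or "%d/%m/%Y" for slash-separated dates, else None.

    Month-first is tried first, so it wins whenever both formats
    parse every value (the ambiguous case).
    """
    for fmt in ["%m/%d/%Y", "%d/%m/%Y"]:
        try:
            for value in values:
                datetime.strptime(value, fmt)
        except ValueError:
            continue  # at least one value does not fit this format
        return fmt
    return None


print(guess_day_month_format(["05/23/2024"]))                # month first
print(guess_day_month_format(["23/05/2024"]))                # day first
print(guess_day_month_format(["03/05/2024"]))                # ambiguous
print(guess_day_month_format(["03/05/2024", "23/05/2024"]))  # resolved by 2nd value
```

A single value with a day above 12 is enough to settle the format for the whole column, which is why ambiguity becomes rarer as the fitting data grows.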

If the days are randomly distributed and the fitting data is large enough, it is unlikely that all days would be 12 or below, so the inferred format should usually be correct. To be sure, one can specify the format in the constructor.

Methods

- fit(column[, y]): Fit the transformer.
- fit_transform(column[, y]): Fit the encoder and transform a column.
- get_feature_names_out([input_features]): Return a list of features generated by the transformer.
- get_params([deep]): Get parameters for this estimator.
- set_params(**params): Set the parameters of this estimator.
- set_transform_request(*[, column]): Configure whether metadata should be requested to be passed to the transform method.
- transform(column): Transform a column.

- fit(column, y=None)

Fit the transformer.

Subclasses should implement fit_transform and transform.

- Parameters:

- column

a pandas or polars Series

Unlike most scikit-learn transformers, single-column transformers transform a single column, not a whole dataframe.

- y

column or dataframe

Prediction targets.

- Returns:

- self

The fitted transformer.

- get_feature_names_out(input_features=None)

Return a list of features generated by the transformer.

Each feature has the format {input_name}_{n_component}, where input_name is the name of the input column, or a default name for the encoder, and n_component is the index of the specific feature.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters:

- **params

dict

Estimator parameters.

- Returns:

- self

estimator instance

Estimator instance.

- set_transform_request(*, column='$UNCHANGED$')

Configure whether metadata should be requested to be passed to the transform method.

Note that this method is only relevant when this estimator is used as a sub-estimator within a meta-estimator and metadata routing is enabled with enable_metadata_routing=True (see sklearn.set_config()). Please check the User Guide on how the routing mechanism works.

The options for each parameter are:

- True: metadata is requested, and passed to transform if provided. The request is ignored if metadata is not provided.
- False: metadata is not requested and the meta-estimator will not pass it to transform.
- None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.
- str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

Added in version 1.3.

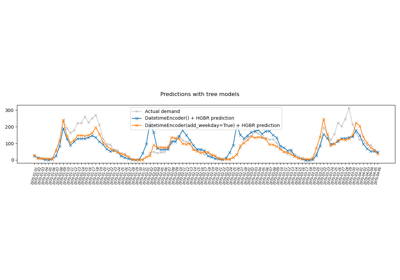

Gallery examples

Handling datetime features with the DatetimeEncoder