Note

Go to the end to download the full example code. or to run this example in your browser via JupyterLite or Binder

AggJoiner on a credit fraud dataset#

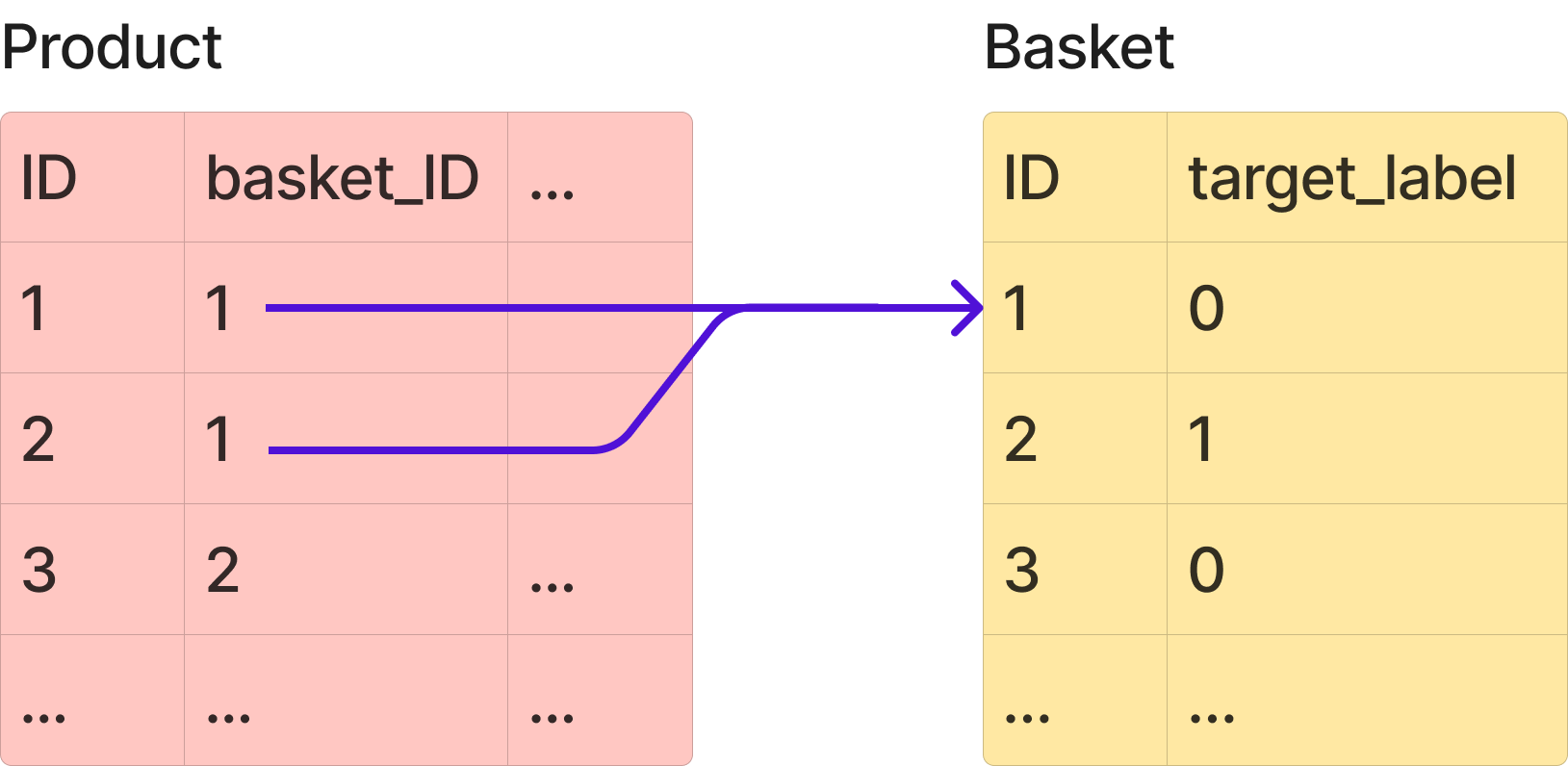

Many problems involve tables whose entities have a one-to-many relationship.

To simplify aggregate-then-join operations for machine learning, we can include

the AggJoiner in our pipeline.

In this example, we are tackling a fraudulent loan detection use case. Because fraud is rare, this dataset is extremely imbalanced, with a prevalence of around 1.4%.

The data consists of two distinct entities: e-commerce “baskets”, and “products”. Baskets can be tagged fraudulent (1) or not (0), and are essentially a list of products of variable size. Each basket is linked to at least one products, e.g. basket 1 can have product 1 and 2.

Our aim is to predict which baskets are fraudulent.

The products dataframe can be joined on the baskets dataframe using the basket_ID

column.

Each product has several attributes:

a category (marked by the column

"item"),a model (

"model"),a brand (

"make"),a merchant code (

"goods_code"),a price per unit (

"cash_price"),a quantity selected in the basket (

"Nbr_of_prod_purchas")

from skrub import TableReport

from skrub.datasets import fetch_credit_fraud

bunch = fetch_credit_fraud()

products, baskets = bunch.products, bunch.baskets

TableReport(products)

| basket_ID | item | cash_price | make | model | goods_code | Nbr_of_prod_purchas | |

|---|---|---|---|---|---|---|---|

| 1 | 51,113 | COMPUTER PERIPHERALS ACCESSORIES | 409 | APPLE | APPLE WATCH SERIES 6 GPS 44MM SPACE GREY ALUMINIUM | 239001518 | 1 |

| 9 | 41,798 | COMPUTERS | 1,187 | APPLE | 2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8 | 239246780 | 1 |

| 11 | 39,361 | COMPUTERS | 898 | APPLE | 2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC | 239246776 | 1 |

| 15 | 38,615 | COMPUTER PERIPHERALS ACCESSORIES | 379 | APPLE | APPLE WATCH SERIES 6 GPS 40MM BLUE ALUMINIUM CASE | 239001540 | 1 |

| 16 | 70,262 | COMPUTERS | 1,899 | APPLE | 2021 APPLE MACBOOK PRO 14 M1 PRO PROCESSOR 16GB RA | 240575990 | 1 |

| 163,352 | 42,613 | BEDROOM FURNITURE | 259 | SILENTNIGHT | SILENTNIGHT SLEEP GENIUS FULL HEIGHT HEADBOARD DOU | 236938439 | 1 |

| 163,353 | 42,613 | OUTDOOR FURNITURE | 949 | LG OUTDOOR | LG OUTDOOR BERGEN 2-SEAT GARDEN SIDE TABLE RECLINI | 239742814 | 1 |

| 163,354 | 43,567 | COMPUTERS | 1,099 | APPLE | 2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 25 | 240040978 | 1 |

| 163,355 | 43,567 | COMPUTERS | 2,099 | APPLE | 2020 APPLE IMAC 27 ALL-IN-ONE INTEL CORE I7 8GB RA | 238923518 | 1 |

| 163,356 | 68,268 | TELEVISIONS HOME CINEMA | 799 | LG | LG OLED48A16LA 2021 OLED HDR 4K ULTRA HD SMART TV | 239866717 | 1 |

basket_ID

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

61,241 (56.0%)

This column has a high cardinality (> 40).

- Mean ± Std

- 3.59e+04 ± 2.24e+04

- Median ± IQR

- 35,203 ± 39,444

- Min | Max

- 0 | 76,543

item

ObjectDType- Null values

- 0 (0.0%)

- Unique values

-

166 (0.2%)

This column has a high cardinality (> 40).

Most frequent values

COMPUTERS

FULFILMENT CHARGE

COMPUTER PERIPHERALS ACCESSORIES

TELEVISIONS HOME CINEMA

WARRANTY

LIVING DINING FURNITURE

TELEPHONES, FAX MACHINES & TWO-WAY RADIOS

BEDROOM FURNITURE

COMPUTER PERIPHERALS & ACCESSORIES

List:SERVICE

['COMPUTERS', 'FULFILMENT CHARGE', 'COMPUTER PERIPHERALS ACCESSORIES', 'TELEVISIONS HOME CINEMA', 'WARRANTY', 'LIVING DINING FURNITURE', 'TELEPHONES, FAX MACHINES & TWO-WAY RADIOS', 'BEDROOM FURNITURE', 'COMPUTER PERIPHERALS & ACCESSORIES', 'SERVICE']

cash_price

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

1,280 (1.2%)

This column has a high cardinality (> 40).

- Mean ± Std

- 672. ± 714.

- Median ± IQR

- 499 ± 1,049

- Min | Max

- 0 | 18,349

make

ObjectDType- Null values

- 1,273 (1.2%)

- Unique values

-

690 (0.6%)

This column has a high cardinality (> 40).

Most frequent values

APPLE

RETAILER

LG

SAMSUNG

SONY

ANYDAY RETAILER

WEST ELM

SWOON

KETTLER

List:DYSON

['APPLE', 'RETAILER', 'LG', 'SAMSUNG', 'SONY', 'ANYDAY RETAILER', 'WEST ELM', 'SWOON', 'KETTLER', 'DYSON']

model

ObjectDType- Null values

- 1,273 (1.2%)

- Unique values

-

6,477 (5.9%)

This column has a high cardinality (> 40).

Most frequent values

RETAILER

2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC

2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8

2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS

2020 APPLE MACBOOK AIR

APPLE WATCH SERIES 6

APPLE PENCIL 2ND GENERATION 2018 MATTE WHITE

2021 APPLE IPAD PRO 11 M1 PROCESSOR IOS WI-FI 128G

2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 25

List:2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 12

['RETAILER', '2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC', '2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8', '2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS', '2020 APPLE MACBOOK AIR', 'APPLE WATCH SERIES 6', 'APPLE PENCIL 2ND GENERATION 2018 MATTE WHITE', '2021 APPLE IPAD PRO 11 M1 PROCESSOR IOS WI-FI 128G', '2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 25', '2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 12']

goods_code

ObjectDType- Null values

- 0 (0.0%)

- Unique values

-

10,738 (9.8%)

This column has a high cardinality (> 40).

Most frequent values

FULFILMENT

239246776

239246779

239246778

237841896

239246782

236604736

239246775

239827061

List:239246783

['FULFILMENT', '239246776', '239246779', '239246778', '237841896', '239246782', '236604736', '239246775', '239827061', '239246783']

Nbr_of_prod_purchas

Int64DType- Null values

- 0 (0.0%)

- Unique values

- 19 (< 0.1%)

- Mean ± Std

- 1.05 ± 0.426

- Median ± IQR

- 1 ± 0

- Min | Max

- 1 | 40

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

|

Column

|

Column name

|

dtype

|

Is sorted

|

Null values

|

Unique values

|

Mean

|

Std

|

Min

|

Median

|

Max

|

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | basket_ID | Int64DType | False | 0 (0.0%) | 61241 (56.0%) | 3.59e+04 | 2.24e+04 | 0 | 35,203 | 76,543 |

| 1 | item | ObjectDType | False | 0 (0.0%) | 166 (0.2%) | |||||

| 2 | cash_price | Int64DType | False | 0 (0.0%) | 1280 (1.2%) | 672. | 714. | 0 | 499 | 18,349 |

| 3 | make | ObjectDType | False | 1273 (1.2%) | 690 (0.6%) | |||||

| 4 | model | ObjectDType | False | 1273 (1.2%) | 6477 (5.9%) | |||||

| 5 | goods_code | ObjectDType | False | 0 (0.0%) | 10738 (9.8%) | |||||

| 6 | Nbr_of_prod_purchas | Int64DType | False | 0 (0.0%) | 19 (< 0.1%) | 1.05 | 0.426 | 1 | 1 | 40 |

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

basket_ID

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

61,241 (56.0%)

This column has a high cardinality (> 40).

- Mean ± Std

- 3.59e+04 ± 2.24e+04

- Median ± IQR

- 35,203 ± 39,444

- Min | Max

- 0 | 76,543

item

ObjectDType- Null values

- 0 (0.0%)

- Unique values

-

166 (0.2%)

This column has a high cardinality (> 40).

Most frequent values

COMPUTERS

FULFILMENT CHARGE

COMPUTER PERIPHERALS ACCESSORIES

TELEVISIONS HOME CINEMA

WARRANTY

LIVING DINING FURNITURE

TELEPHONES, FAX MACHINES & TWO-WAY RADIOS

BEDROOM FURNITURE

COMPUTER PERIPHERALS & ACCESSORIES

List:SERVICE

['COMPUTERS', 'FULFILMENT CHARGE', 'COMPUTER PERIPHERALS ACCESSORIES', 'TELEVISIONS HOME CINEMA', 'WARRANTY', 'LIVING DINING FURNITURE', 'TELEPHONES, FAX MACHINES & TWO-WAY RADIOS', 'BEDROOM FURNITURE', 'COMPUTER PERIPHERALS & ACCESSORIES', 'SERVICE']

cash_price

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

1,280 (1.2%)

This column has a high cardinality (> 40).

- Mean ± Std

- 672. ± 714.

- Median ± IQR

- 499 ± 1,049

- Min | Max

- 0 | 18,349

make

ObjectDType- Null values

- 1,273 (1.2%)

- Unique values

-

690 (0.6%)

This column has a high cardinality (> 40).

Most frequent values

APPLE

RETAILER

LG

SAMSUNG

SONY

ANYDAY RETAILER

WEST ELM

SWOON

KETTLER

List:DYSON

['APPLE', 'RETAILER', 'LG', 'SAMSUNG', 'SONY', 'ANYDAY RETAILER', 'WEST ELM', 'SWOON', 'KETTLER', 'DYSON']

model

ObjectDType- Null values

- 1,273 (1.2%)

- Unique values

-

6,477 (5.9%)

This column has a high cardinality (> 40).

Most frequent values

RETAILER

2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC

2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8

2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS

2020 APPLE MACBOOK AIR

APPLE WATCH SERIES 6

APPLE PENCIL 2ND GENERATION 2018 MATTE WHITE

2021 APPLE IPAD PRO 11 M1 PROCESSOR IOS WI-FI 128G

2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 25

List:2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 12

['RETAILER', '2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC', '2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8', '2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS', '2020 APPLE MACBOOK AIR', 'APPLE WATCH SERIES 6', 'APPLE PENCIL 2ND GENERATION 2018 MATTE WHITE', '2021 APPLE IPAD PRO 11 M1 PROCESSOR IOS WI-FI 128G', '2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 25', '2021 APPLE IPAD PRO 12 9 M1 PROCESSOR IOS WI-FI 12']

goods_code

ObjectDType- Null values

- 0 (0.0%)

- Unique values

-

10,738 (9.8%)

This column has a high cardinality (> 40).

Most frequent values

FULFILMENT

239246776

239246779

239246778

237841896

239246782

236604736

239246775

239827061

List:239246783

['FULFILMENT', '239246776', '239246779', '239246778', '237841896', '239246782', '236604736', '239246775', '239827061', '239246783']

Nbr_of_prod_purchas

Int64DType- Null values

- 0 (0.0%)

- Unique values

- 19 (< 0.1%)

- Mean ± Std

- 1.05 ± 0.426

- Median ± IQR

- 1 ± 0

- Min | Max

- 1 | 40

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

| Column 1 | Column 2 | Cramér's V | Pearson's Correlation |

|---|---|---|---|

| model | goods_code | 0.612 | |

| item | model | 0.480 | |

| item | make | 0.465 | |

| item | goods_code | 0.436 | |

| make | model | 0.312 | |

| make | goods_code | 0.256 | |

| basket_ID | item | 0.207 | |

| basket_ID | model | 0.205 | |

| cash_price | make | 0.139 | |

| basket_ID | make | 0.137 | |

| item | cash_price | 0.133 | |

| make | Nbr_of_prod_purchas | 0.123 | |

| basket_ID | goods_code | 0.108 | |

| item | Nbr_of_prod_purchas | 0.0977 | |

| cash_price | model | 0.0917 | |

| basket_ID | cash_price | 0.0729 | 0.120 |

| cash_price | goods_code | 0.0685 | |

| goods_code | Nbr_of_prod_purchas | 0.0460 | |

| basket_ID | Nbr_of_prod_purchas | 0.0455 | -0.0328 |

| model | Nbr_of_prod_purchas | 0.0378 |

Please enable javascript

The skrub table reports need javascript to display correctly. If you are displaying a report in a Jupyter notebook and you see this message, you may need to re-execute the cell or to trust the notebook (button on the top right or "File > Trust notebook").

| ID | fraud_flag | |

|---|---|---|

| 1 | 51,113 | 0 |

| 7 | 41,798 | 0 |

| 9 | 39,361 | 0 |

| 13 | 38,615 | 0 |

| 14 | 70,262 | 0 |

| 92,785 | 21,243 | 0 |

| 92,786 | 45,891 | 0 |

| 92,787 | 42,613 | 0 |

| 92,788 | 43,567 | 0 |

| 92,789 | 68,268 | 0 |

ID

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

61,241 (100.0%)

This column has a high cardinality (> 40).

- Mean ± Std

- 3.82e+04 ± 2.21e+04

- Median ± IQR

- 38,158 ± 38,196

- Min | Max

- 0 | 76,543

fraud_flag

Int64DType- Null values

- 0 (0.0%)

- Unique values

- 2 (< 0.1%)

- Mean ± Std

- 0.0130 ± 0.113

- Median ± IQR

- 0 ± 0

- Min | Max

- 0 | 1

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

|

Column

|

Column name

|

dtype

|

Is sorted

|

Null values

|

Unique values

|

Mean

|

Std

|

Min

|

Median

|

Max

|

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | ID | Int64DType | False | 0 (0.0%) | 61241 (100.0%) | 3.82e+04 | 2.21e+04 | 0 | 38,158 | 76,543 |

| 1 | fraud_flag | Int64DType | False | 0 (0.0%) | 2 (< 0.1%) | 0.0130 | 0.113 | 0 | 0 | 1 |

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

ID

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

61,241 (100.0%)

This column has a high cardinality (> 40).

- Mean ± Std

- 3.82e+04 ± 2.21e+04

- Median ± IQR

- 38,158 ± 38,196

- Min | Max

- 0 | 76,543

fraud_flag

Int64DType- Null values

- 0 (0.0%)

- Unique values

- 2 (< 0.1%)

- Mean ± Std

- 0.0130 ± 0.113

- Median ± IQR

- 0 ± 0

- Min | Max

- 0 | 1

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

| Column 1 | Column 2 | Cramér's V | Pearson's Correlation |

|---|---|---|---|

| ID | fraud_flag | 0.0709 | 0.0161 |

Please enable javascript

The skrub table reports need javascript to display correctly. If you are displaying a report in a Jupyter notebook and you see this message, you may need to re-execute the cell or to trust the notebook (button on the top right or "File > Trust notebook").

Naive aggregation#

Let’s explore a naive solution first.

Note

Click here to skip this section and see the AggJoiner in action!

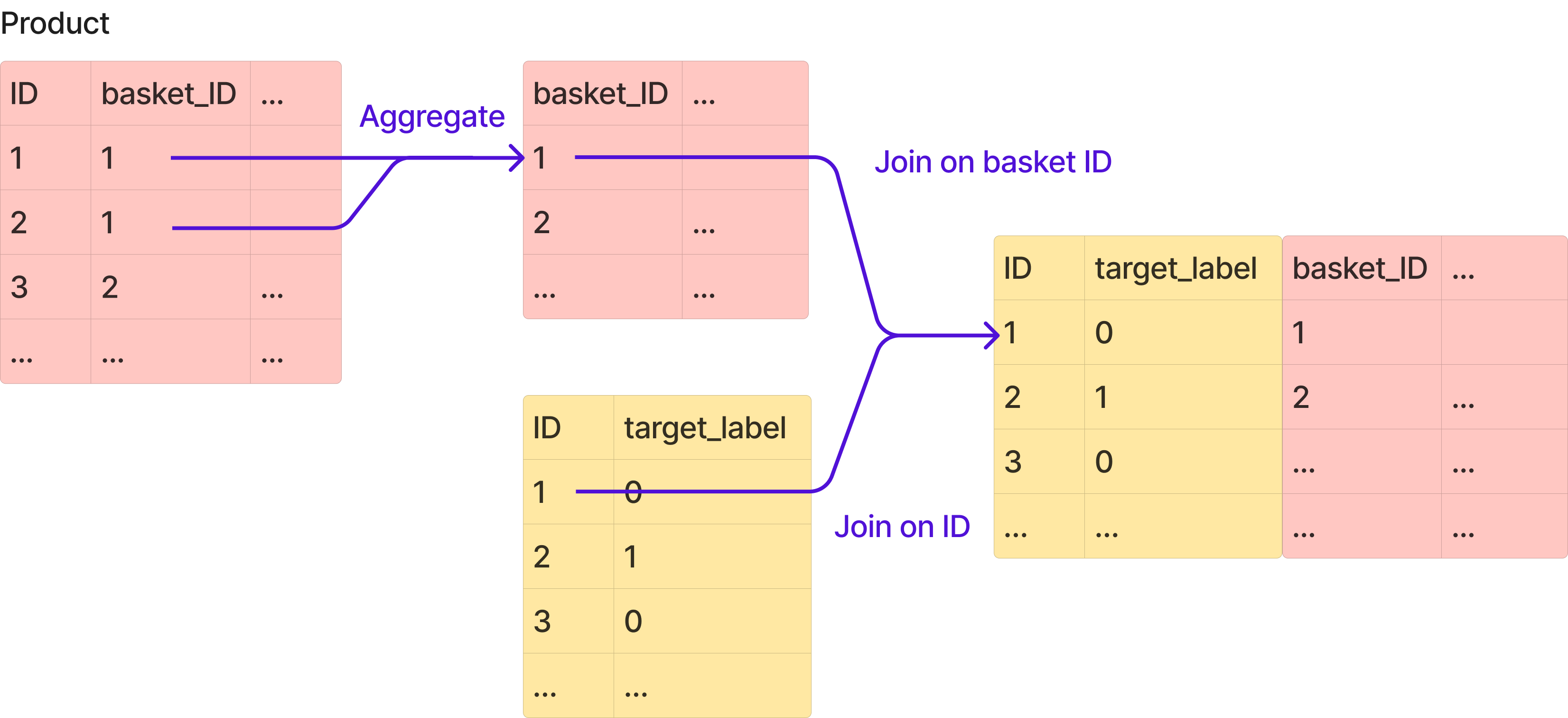

The first idea that comes to mind to merge these two tables is to aggregate the products attributes into lists, using their basket IDs.

products_grouped = products.groupby("basket_ID").agg(list)

TableReport(products_grouped)

| basket_ID | item | cash_price | make | model | goods_code | Nbr_of_prod_purchas |

|---|---|---|---|---|---|---|

| 0 | ['COMPUTERS', 'WARRANTY', 'FULFILMENT CHARGE'] | [1249, 35, 11] | ['APPLE', 'RETAILER', 'RETAILER'] | ['2021 APPLE IMAC 24 ALL-IN-ONE M1 PROCESSOR 8GB RAM', 'RETAILER', 'RETAILER'] | ['240040969', '236604727', 'FULFILMENT'] | [1, 1, 1] |

| 1 | ['OUTDOOR ACCESSORIES', 'OUTDOOR FURNITURE'] | [679, 369] | ['KETTLER', 'RETAILER'] | ['RETAILER', 'RETAILER'] | ['237874616', '238222170'] | [1, 1] |

| 2 | ['OUTDOOR FURNITURE', 'OUTDOOR FURNITURE'] | [1879, 110] | ['KETTLER', 'KETTLER'] | ['RETAILER', 'RETAILER'] | ['239482916', '235452317'] | [1, 1] |

| 4 | ['TELEPHONES, FAX MACHINES & TWO-WAY RADIOS', 'FULFILMENT CHARGE'] | [999, 0] | ['APPLE', 'RETAILER'] | ['APPLE IPHONE 12 PRO', 'RETAILER'] | ['239091969', 'FULFILMENT'] | [1, 1] |

| 5 | ['LIVING & DINING FURNITURE'] | [749] | ['RETAILER'] | ['RETAILER'] | ['238000174'] | [1] |

| 76,538 | ['HOT DRINK PREPARATION', 'BARWARE', 'KITCHEN SCALES MEASURES', 'KITCHEN SCALES MEASURES', 'WINDOW DRESSING', 'LIVING DINING FURNITURE', 'SERVICE', 'LIVING DINING FURNITURE'] | [6, 5, 2, 1, 120, 1549, 0, 1349] | ['RETAILER', 'RETAILER', 'ANYDAY RETAILER', 'ANYDAY RETAILER', 'RETAILER', 'RETAILER', 'RETAILER', 'RETAILER'] | ['RETAILER TEA STRAINER WITH STAND', 'RETAILER DOUBLE JIGGER', 'ANYDAY RETAILER PLASTIC MEASURING JUG 1', 'ANYDAY RETAILER PLASTIC MEASURING JUG 5', 'RETAILER RONA PAIR LINED EYELET CURTAIN', 'RETAILER BARBICAN LARGE 3 SEATER SOFA L', 'RETAILER', 'RETAILER BARBICAN MEDIUM 2 SEATER SOFA'] | ['231251059', '231034699', '236902782', '236902832', '237968549', '237013495', 'DMS4462', '237013514'] | [1, 1, 1, 1, 1, 1, 1, 1] |

| 76,539 | ['AUDIO ACCESSORIES', 'WARRANTY', 'HEALTH BEAUTY ELECTRICAL', 'WARRANTY'] | [140, 20, 357, 15] | ['APPLE', 'RETAILER', 'DYSON', 'RETAILER'] | ['2021 APPLE AIRPODS WITH MAGSAFE CHARGING CASE 3RD', 'RETAILER', 'DYSON CORRALE CORD-FREE HAIR STRAIGHTENERS', 'RETAILER'] | ['240575988', '236604738', '238602413', '237371145'] | [1, 1, 1, 1] |

| 76,540 | ['COMPUTER PERIPHERALS ACCESSORIES'] | [399] | ['APPLE'] | ['APPLE WATCH NIKE SERIES 7 GPS 45MM MIDNIGHT ALUMIN'] | ['240382077'] | [1] |

| 76,541 | ['BEDROOM FURNITURE', 'SERVICE', 'BEDROOM FURNITURE', 'BEDROOM FURNITURE', 'SERVICE', 'SERVICE', 'BED LINEN', 'BEDROOM FURNITURE'] | [1519, 30, 279, 339, 30, 0, 26, 749] | ['RETAILER', 'RETAILER', 'RETAILER', 'SILENTNIGHT', 'RETAILER', 'RETAILER', 'RETAILER', 'RETAILER'] | ['RETAILER LUXURY NATURAL COLLECTION BRIT', 'RETAILER', 'RETAILER CLASSIC ECO 800 POCKET SPRING', 'SILENTNIGHT NON SPRUNG 2 DRAWER DIVAN STORAGE BED', 'RETAILER', 'RETAILER', 'RETAILER NATURAL COTTON QUILTED MATTRES', 'RETAILER ROUEN OTTOMAN STORAGE UPHOLSTE'] | ['240361566', 'DMS22', '240108867', '236938413', 'DMS22', 'DMS4463', '231083318', '238088761'] | [1, 1, 1, 1, 1, 1, 1, 1] |

| 76,543 | ['COMPUTERS', 'FULFILMENT CHARGE'] | [1649, 7] | ['APPLE', 'RETAILER'] | ['2021 APPLE IMAC 24 ALL-IN-ONE M1 PROCESSOR 8GB RAM', 'RETAILER'] | ['240040968', 'FULFILMENT'] | [1, 1] |

item

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 3,181 (5.2%)

Most frequent values

['COMPUTERS']

['COMPUTERS', 'FULFILMENT CHARGE']

['TELEVISIONS HOME CINEMA']

['COMPUTER PERIPHERALS ACCESSORIES']

['TELEPHONES, FAX MACHINES & TWO-WAY RADIOS', 'FULFILMENT CHARGE']

['COMPUTERS', 'WARRANTY']

['COMPUTER PERIPHERALS & ACCESSORIES', 'FULFILMENT CHARGE']

['COMPUTERS', 'COMPUTER PERIPHERALS ACCESSORIES']

['LIVING DINING FURNITURE']

['COMPUTER PERIPHERALS ACCESSORIES', 'FULFILMENT CHARGE']

[['COMPUTERS'], ['COMPUTERS', 'FULFILMENT CHARGE'], ['TELEVISIONS HOME CINEMA'], ['COMPUTER PERIPHERALS ACCESSORIES'], ['TELEPHONES, FAX MACHINES & TWO-WAY RADIOS', 'FULFILMENT CHARGE'], ['COMPUTERS', 'WARRANTY'], ['COMPUTER PERIPHERALS & ACCESSORIES', 'FULFILMENT CHARGE'], ['COMPUTERS', 'COMPUTER PERIPHERALS ACCESSORIES'], ['LIVING DINING FURNITURE'], ['COMPUTER PERIPHERALS ACCESSORIES', 'FULFILMENT CHARGE']]

cash_price

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 10,884 (17.8%)

Most frequent values

[949]

[1099]

[1187]

[379]

[899]

[999]

[399]

[898]

[369]

[749]

[[949], [1099], [1187], [379], [899], [999], [399], [898], [369], [749]]

make

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 2,651 (4.3%)

Most frequent values

['APPLE']

['APPLE', 'RETAILER']

['LG']

['APPLE', 'APPLE']

['SAMSUNG']

['LG', 'RETAILER']

['APPLE', 'APPLE', 'RETAILER']

['APPLE', 'RETAILER', 'RETAILER']

['SONY']

['RETAILER', 'RETAILER']

[['APPLE'], ['APPLE', 'RETAILER'], ['LG'], ['APPLE', 'APPLE'], ['SAMSUNG'], ['LG', 'RETAILER'], ['APPLE', 'APPLE', 'RETAILER'], ['APPLE', 'RETAILER', 'RETAILER'], ['SONY'], ['RETAILER', 'RETAILER']]

model

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 8,182 (13.4%)

Most frequent values

['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC']

['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC', 'RETAILER']

['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8']

['2020 APPLE MACBOOK AIR', 'RETAILER']

['2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS']

['APPLE WATCH SERIES 6', 'RETAILER']

['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8', 'RETAILER']

['RETAILER', 'RETAILER']

['LG OLED55C14LB 2021 OLED HDR 4K ULTRA HD SMART TV']

['2020 APPLE MACBOOK PRO', 'RETAILER']

[['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC'], ['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC', 'RETAILER'], ['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8'], ['2020 APPLE MACBOOK AIR', 'RETAILER'], ['2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS'], ['APPLE WATCH SERIES 6', 'RETAILER'], ['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8', 'RETAILER'], ['RETAILER', 'RETAILER'], ['LG OLED55C14LB 2021 OLED HDR 4K ULTRA HD SMART TV'], ['2020 APPLE MACBOOK PRO', 'RETAILER']]

goods_code

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 12,554 (20.5%)

Most frequent values

['239246776']

['239246779']

['239246776', 'FULFILMENT']

['239246778']

['239246782']

['239827061']

['239246779', 'FULFILMENT']

['239246775']

['239827062']

['239246778', 'FULFILMENT']

[['239246776'], ['239246779'], ['239246776', 'FULFILMENT'], ['239246778'], ['239246782'], ['239827061'], ['239246779', 'FULFILMENT'], ['239246775'], ['239827062'], ['239246778', 'FULFILMENT']]

Nbr_of_prod_purchas

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 596 (1.0%)

Most frequent values

[1]

[1, 1]

[1, 1, 1]

[1, 1, 1, 1]

[1, 1, 1, 1, 1]

[2]

[2, 1]

[1, 1, 1, 1, 1, 1]

[1, 2]

[1, 2, 1]

[[1], [1, 1], [1, 1, 1], [1, 1, 1, 1], [1, 1, 1, 1, 1], [2], [2, 1], [1, 1, 1, 1, 1, 1], [1, 2], [1, 2, 1]]

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

|

Column

|

Column name

|

dtype

|

Is sorted

|

Null values

|

Unique values

|

Mean

|

Std

|

Min

|

Median

|

Max

|

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | item | ObjectDType | False | 0 (0.0%) | 3181 (5.2%) | |||||

| 1 | cash_price | ObjectDType | False | 0 (0.0%) | 10884 (17.8%) | |||||

| 2 | make | ObjectDType | False | 0 (0.0%) | 2651 (4.3%) | |||||

| 3 | model | ObjectDType | False | 0 (0.0%) | 8182 (13.4%) | |||||

| 4 | goods_code | ObjectDType | False | 0 (0.0%) | 12554 (20.5%) | |||||

| 5 | Nbr_of_prod_purchas | ObjectDType | False | 0 (0.0%) | 596 (1.0%) |

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

item

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 3,181 (5.2%)

Most frequent values

['COMPUTERS']

['COMPUTERS', 'FULFILMENT CHARGE']

['TELEVISIONS HOME CINEMA']

['COMPUTER PERIPHERALS ACCESSORIES']

['TELEPHONES, FAX MACHINES & TWO-WAY RADIOS', 'FULFILMENT CHARGE']

['COMPUTERS', 'WARRANTY']

['COMPUTER PERIPHERALS & ACCESSORIES', 'FULFILMENT CHARGE']

['COMPUTERS', 'COMPUTER PERIPHERALS ACCESSORIES']

['LIVING DINING FURNITURE']

['COMPUTER PERIPHERALS ACCESSORIES', 'FULFILMENT CHARGE']

[['COMPUTERS'], ['COMPUTERS', 'FULFILMENT CHARGE'], ['TELEVISIONS HOME CINEMA'], ['COMPUTER PERIPHERALS ACCESSORIES'], ['TELEPHONES, FAX MACHINES & TWO-WAY RADIOS', 'FULFILMENT CHARGE'], ['COMPUTERS', 'WARRANTY'], ['COMPUTER PERIPHERALS & ACCESSORIES', 'FULFILMENT CHARGE'], ['COMPUTERS', 'COMPUTER PERIPHERALS ACCESSORIES'], ['LIVING DINING FURNITURE'], ['COMPUTER PERIPHERALS ACCESSORIES', 'FULFILMENT CHARGE']]

cash_price

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 10,884 (17.8%)

Most frequent values

[949]

[1099]

[1187]

[379]

[899]

[999]

[399]

[898]

[369]

[749]

[[949], [1099], [1187], [379], [899], [999], [399], [898], [369], [749]]

make

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 2,651 (4.3%)

Most frequent values

['APPLE']

['APPLE', 'RETAILER']

['LG']

['APPLE', 'APPLE']

['SAMSUNG']

['LG', 'RETAILER']

['APPLE', 'APPLE', 'RETAILER']

['APPLE', 'RETAILER', 'RETAILER']

['SONY']

['RETAILER', 'RETAILER']

[['APPLE'], ['APPLE', 'RETAILER'], ['LG'], ['APPLE', 'APPLE'], ['SAMSUNG'], ['LG', 'RETAILER'], ['APPLE', 'APPLE', 'RETAILER'], ['APPLE', 'RETAILER', 'RETAILER'], ['SONY'], ['RETAILER', 'RETAILER']]

model

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 8,182 (13.4%)

Most frequent values

['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC']

['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC', 'RETAILER']

['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8']

['2020 APPLE MACBOOK AIR', 'RETAILER']

['2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS']

['APPLE WATCH SERIES 6', 'RETAILER']

['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8', 'RETAILER']

['RETAILER', 'RETAILER']

['LG OLED55C14LB 2021 OLED HDR 4K ULTRA HD SMART TV']

['2020 APPLE MACBOOK PRO', 'RETAILER']

[['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC'], ['2020 APPLE MACBOOK AIR 13 3 RETINA DISPLAY M1 PROC', 'RETAILER'], ['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8'], ['2020 APPLE MACBOOK AIR', 'RETAILER'], ['2020 APPLE IPAD AIR 10 9 A14 BIONIC PROCESSOR IOS'], ['APPLE WATCH SERIES 6', 'RETAILER'], ['2020 APPLE MACBOOK PRO 13 TOUCH BAR M1 PROCESSOR 8', 'RETAILER'], ['RETAILER', 'RETAILER'], ['LG OLED55C14LB 2021 OLED HDR 4K ULTRA HD SMART TV'], ['2020 APPLE MACBOOK PRO', 'RETAILER']]

goods_code

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 12,554 (20.5%)

Most frequent values

['239246776']

['239246779']

['239246776', 'FULFILMENT']

['239246778']

['239246782']

['239827061']

['239246779', 'FULFILMENT']

['239246775']

['239827062']

['239246778', 'FULFILMENT']

[['239246776'], ['239246779'], ['239246776', 'FULFILMENT'], ['239246778'], ['239246782'], ['239827061'], ['239246779', 'FULFILMENT'], ['239246775'], ['239827062'], ['239246778', 'FULFILMENT']]

Nbr_of_prod_purchas

ObjectDType- Null values

- 0 (0.0%)

- Unique values

- 596 (1.0%)

Most frequent values

[1]

[1, 1]

[1, 1, 1]

[1, 1, 1, 1]

[1, 1, 1, 1, 1]

[2]

[2, 1]

[1, 1, 1, 1, 1, 1]

[1, 2]

[1, 2, 1]

[[1], [1, 1], [1, 1, 1], [1, 1, 1, 1], [1, 1, 1, 1, 1], [2], [2, 1], [1, 1, 1, 1, 1, 1], [1, 2], [1, 2, 1]]

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

| Column 1 | Column 2 | Cramér's V | Pearson's Correlation |

|---|---|---|---|

| model | goods_code | 0.552 | |

| item | make | 0.545 | |

| cash_price | goods_code | 0.507 | |

| item | model | 0.462 | |

| make | Nbr_of_prod_purchas | 0.432 | |

| item | Nbr_of_prod_purchas | 0.349 | |

| make | model | 0.338 | |

| item | cash_price | 0.319 | |

| item | goods_code | 0.301 | |

| make | goods_code | 0.294 | |

| cash_price | model | 0.281 | |

| model | Nbr_of_prod_purchas | 0.205 | |

| cash_price | make | 0.203 | |

| goods_code | Nbr_of_prod_purchas | 0.157 | |

| cash_price | Nbr_of_prod_purchas | 0.155 |

Please enable javascript

The skrub table reports need javascript to display correctly. If you are displaying a report in a Jupyter notebook and you see this message, you may need to re-execute the cell or to trust the notebook (button on the top right or "File > Trust notebook").

Then, we can expand all lists into columns, as if we were “flattening” the dataframe.

We end up with a products dataframe ready to be joined on the baskets dataframe, using

"basket_ID" as the join key.

import pandas as pd

products_flatten = []

for col in products_grouped.columns:

cols = [f"{col}{idx}" for idx in range(24)]

products_flatten.append(pd.DataFrame(products_grouped[col].to_list(), columns=cols))

products_flatten = pd.concat(products_flatten, axis=1)

products_flatten.insert(0, "basket_ID", products_grouped.index)

TableReport(products_flatten)

| basket_ID | item0 | item1 | item2 | item3 | item4 | item5 | item6 | item7 | item8 | item9 | item10 | item11 | item12 | item13 | item14 | item15 | item16 | item17 | item18 | item19 | item20 | item21 | item22 | item23 | cash_price0 | cash_price1 | cash_price2 | cash_price3 | cash_price4 | cash_price5 | cash_price6 | cash_price7 | cash_price8 | cash_price9 | cash_price10 | cash_price11 | cash_price12 | cash_price13 | cash_price14 | cash_price15 | cash_price16 | cash_price17 | cash_price18 | cash_price19 | cash_price20 | cash_price21 | cash_price22 | cash_price23 | make0 | make1 | make2 | make3 | make4 | make5 | make6 | make7 | make8 | make9 | make10 | make11 | make12 | make13 | make14 | make15 | make16 | make17 | make18 | make19 | make20 | make21 | make22 | make23 | model0 | model1 | model2 | model3 | model4 | model5 | model6 | model7 | model8 | model9 | model10 | model11 | model12 | model13 | model14 | model15 | model16 | model17 | model18 | model19 | model20 | model21 | model22 | model23 | goods_code0 | goods_code1 | goods_code2 | goods_code3 | goods_code4 | goods_code5 | goods_code6 | goods_code7 | goods_code8 | goods_code9 | goods_code10 | goods_code11 | goods_code12 | goods_code13 | goods_code14 | goods_code15 | goods_code16 | goods_code17 | goods_code18 | goods_code19 | goods_code20 | goods_code21 | goods_code22 | goods_code23 | Nbr_of_prod_purchas0 | Nbr_of_prod_purchas1 | Nbr_of_prod_purchas2 | Nbr_of_prod_purchas3 | Nbr_of_prod_purchas4 | Nbr_of_prod_purchas5 | Nbr_of_prod_purchas6 | Nbr_of_prod_purchas7 | Nbr_of_prod_purchas8 | Nbr_of_prod_purchas9 | Nbr_of_prod_purchas10 | Nbr_of_prod_purchas11 | Nbr_of_prod_purchas12 | Nbr_of_prod_purchas13 | Nbr_of_prod_purchas14 | Nbr_of_prod_purchas15 | Nbr_of_prod_purchas16 | Nbr_of_prod_purchas17 | Nbr_of_prod_purchas18 | Nbr_of_prod_purchas19 | Nbr_of_prod_purchas20 | Nbr_of_prod_purchas21 | Nbr_of_prod_purchas22 | Nbr_of_prod_purchas23 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | COMPUTERS | WARRANTY | FULFILMENT CHARGE | 1,249 | 35.0 | 11.0 | APPLE | RETAILER | RETAILER | 2021 APPLE IMAC 24 ALL-IN-ONE M1 PROCESSOR 8GB RAM | RETAILER | RETAILER | 240040969 | 236604727 | FULFILMENT | 1 | 1.00 | 1.00 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 1 | 1 | OUTDOOR ACCESSORIES | OUTDOOR FURNITURE | 679 | 369. | KETTLER | RETAILER | RETAILER | RETAILER | 237874616 | 238222170 | 1 | 1.00 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 2 | 2 | OUTDOOR FURNITURE | OUTDOOR FURNITURE | 1,879 | 110. | KETTLER | KETTLER | RETAILER | RETAILER | 239482916 | 235452317 | 1 | 1.00 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 3 | 4 | TELEPHONES, FAX MACHINES & TWO-WAY RADIOS | FULFILMENT CHARGE | 999 | 0.00 | APPLE | RETAILER | APPLE IPHONE 12 PRO | RETAILER | 239091969 | FULFILMENT | 1 | 1.00 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 4 | 5 | LIVING & DINING FURNITURE | 749 | RETAILER | RETAILER | 238000174 | 1 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 61,236 | 76,538 | HOT DRINK PREPARATION | BARWARE | KITCHEN SCALES MEASURES | KITCHEN SCALES MEASURES | WINDOW DRESSING | LIVING DINING FURNITURE | SERVICE | LIVING DINING FURNITURE | 6 | 5.00 | 2.00 | 1.00 | 120. | 1.55e+03 | 0.00 | 1.35e+03 | RETAILER | RETAILER | ANYDAY RETAILER | ANYDAY RETAILER | RETAILER | RETAILER | RETAILER | RETAILER | RETAILER TEA STRAINER WITH STAND | RETAILER DOUBLE JIGGER | ANYDAY RETAILER PLASTIC MEASURING JUG 1 | ANYDAY RETAILER PLASTIC MEASURING JUG 5 | RETAILER RONA PAIR LINED EYELET CURTAIN | RETAILER BARBICAN LARGE 3 SEATER SOFA L | RETAILER | RETAILER BARBICAN MEDIUM 2 SEATER SOFA | 231251059 | 231034699 | 236902782 | 236902832 | 237968549 | 237013495 | DMS4462 | 237013514 | 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 61,237 | 76,539 | AUDIO ACCESSORIES | WARRANTY | HEALTH BEAUTY ELECTRICAL | WARRANTY | 140 | 20.0 | 357. | 15.0 | APPLE | RETAILER | DYSON | RETAILER | 2021 APPLE AIRPODS WITH MAGSAFE CHARGING CASE 3RD | RETAILER | DYSON CORRALE CORD-FREE HAIR STRAIGHTENERS | RETAILER | 240575988 | 236604738 | 238602413 | 237371145 | 1 | 1.00 | 1.00 | 1.00 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 61,238 | 76,540 | COMPUTER PERIPHERALS ACCESSORIES | 399 | APPLE | APPLE WATCH NIKE SERIES 7 GPS 45MM MIDNIGHT ALUMIN | 240382077 | 1 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 61,239 | 76,541 | BEDROOM FURNITURE | SERVICE | BEDROOM FURNITURE | BEDROOM FURNITURE | SERVICE | SERVICE | BED LINEN | BEDROOM FURNITURE | 1,519 | 30.0 | 279. | 339. | 30.0 | 0.00 | 26.0 | 749. | RETAILER | RETAILER | RETAILER | SILENTNIGHT | RETAILER | RETAILER | RETAILER | RETAILER | RETAILER LUXURY NATURAL COLLECTION BRIT | RETAILER | RETAILER CLASSIC ECO 800 POCKET SPRING | SILENTNIGHT NON SPRUNG 2 DRAWER DIVAN STORAGE BED | RETAILER | RETAILER | RETAILER NATURAL COTTON QUILTED MATTRES | RETAILER ROUEN OTTOMAN STORAGE UPHOLSTE | 240361566 | DMS22 | 240108867 | 236938413 | DMS22 | DMS4463 | 231083318 | 238088761 | 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 61,240 | 76,543 | COMPUTERS | FULFILMENT CHARGE | 1,649 | 7.00 | APPLE | RETAILER | 2021 APPLE IMAC 24 ALL-IN-ONE M1 PROCESSOR 8GB RAM | RETAILER | 240040968 | FULFILMENT | 1 | 1.00 |

basket_ID

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

61,241 (100.0%)

This column has a high cardinality (> 40).

- Mean ± Std

- 3.82e+04 ± 2.21e+04

- Median ± IQR

- 38,158 ± 38,196

- Min | Max

- 0 | 76,543

item0

ObjectDType- Null values

- 0 (0.0%)

- Unique values

-

133 (0.2%)

This column has a high cardinality (> 40).

item1

ObjectDType- Null values

- 30,267 (49.4%)

- Unique values

-

130 (0.2%)

This column has a high cardinality (> 40).

item2

ObjectDType- Null values

- 52,467 (85.7%)

- Unique values

-

121 (0.2%)

This column has a high cardinality (> 40).

item3

ObjectDType- Null values

- 58,223 (95.1%)

- Unique values

-

119 (0.2%)

This column has a high cardinality (> 40).

item4

ObjectDType- Null values

- 59,813 (97.7%)

- Unique values

-

98 (0.2%)

This column has a high cardinality (> 40).

item5

ObjectDType- Null values

- 60,366 (98.6%)

- Unique values

-

91 (0.1%)

This column has a high cardinality (> 40).

item6

ObjectDType- Null values

- 60,632 (99.0%)

- Unique values

-

81 (0.1%)

This column has a high cardinality (> 40).

item7

ObjectDType- Null values

- 60,778 (99.2%)

- Unique values

-

76 (0.1%)

This column has a high cardinality (> 40).

item8

ObjectDType- Null values

- 60,874 (99.4%)

- Unique values

-

71 (0.1%)

This column has a high cardinality (> 40).

item9

ObjectDType- Null values

- 60,939 (99.5%)

- Unique values

-

58 (< 0.1%)

This column has a high cardinality (> 40).

item10

ObjectDType- Null values

- 60,995 (99.6%)

- Unique values

-

61 (< 0.1%)

This column has a high cardinality (> 40).

item11

ObjectDType- Null values

- 61,034 (99.7%)

- Unique values

-

49 (< 0.1%)

This column has a high cardinality (> 40).

item12

ObjectDType- Null values

- 61,074 (99.7%)

- Unique values

-

47 (< 0.1%)

This column has a high cardinality (> 40).

item13

ObjectDType- Null values

- 61,105 (99.8%)

- Unique values

-

47 (< 0.1%)

This column has a high cardinality (> 40).

item14

ObjectDType- Null values

- 61,123 (99.8%)

- Unique values

-

44 (< 0.1%)

This column has a high cardinality (> 40).

item15

ObjectDType- Null values

- 61,143 (99.8%)

- Unique values

- 36 (< 0.1%)

item16

ObjectDType- Null values

- 61,160 (99.9%)

- Unique values

- 35 (< 0.1%)

item17

ObjectDType- Null values

- 61,174 (99.9%)

- Unique values

- 29 (< 0.1%)

item18

ObjectDType- Null values

- 61,187 (99.9%)

- Unique values

- 24 (< 0.1%)

item19

ObjectDType- Null values

- 61,194 (99.9%)

- Unique values

- 20 (< 0.1%)

item20

ObjectDType- Null values

- 61,203 (99.9%)

- Unique values

- 22 (< 0.1%)

item21

ObjectDType- Null values

- 61,210 (99.9%)

- Unique values

- 15 (< 0.1%)

item22

ObjectDType- Null values

- 61,219 (100.0%)

- Unique values

- 15 (< 0.1%)

item23

ObjectDType- Null values

- 61,224 (100.0%)

- Unique values

- 11 (< 0.1%)

cash_price0

Int64DType- Null values

- 0 (0.0%)

- Unique values

-

1,102 (1.8%)

This column has a high cardinality (> 40).

- Mean ± Std

- 1.06e+03 ± 679.

- Median ± IQR

- 949 ± 675

- Min | Max

- 2 | 18,349

cash_price1

Float64DType- Null values

- 30,267 (49.4%)

- Unique values

-

689 (1.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 180. ± 379.

- Median ± IQR

- 30.0 ± 118.

- Min | Max

- 0.00 | 6.50e+03

cash_price2

Float64DType- Null values

- 52,467 (85.7%)

- Unique values

-

511 (0.8%)

This column has a high cardinality (> 40).

- Mean ± Std

- 184. ± 369.

- Median ± IQR

- 30.0 ± 167.

- Min | Max

- 0.00 | 6.00e+03

cash_price3

Float64DType- Null values

- 58,223 (95.1%)

- Unique values

-

372 (0.6%)

This column has a high cardinality (> 40).

- Mean ± Std

- 161. ± 298.

- Median ± IQR

- 40.0 ± 161.

- Min | Max

- 0.00 | 2.85e+03

cash_price4

Float64DType- Null values

- 59,813 (97.7%)

- Unique values

-

283 (0.5%)

This column has a high cardinality (> 40).

- Mean ± Std

- 187. ± 355.

- Median ± IQR

- 52.0 ± 185.

- Min | Max

- 0.00 | 3.60e+03

cash_price5

Float64DType- Null values

- 60,366 (98.6%)

- Unique values

-

216 (0.4%)

This column has a high cardinality (> 40).

- Mean ± Std

- 161. ± 297.

- Median ± IQR

- 50.0 ± 166.

- Min | Max

- 0.00 | 3.00e+03

cash_price6

Float64DType- Null values

- 60,632 (99.0%)

- Unique values

-

173 (0.3%)

This column has a high cardinality (> 40).

- Mean ± Std

- 131. ± 268.

- Median ± IQR

- 50.0 ± 115.

- Min | Max

- 0.00 | 4.20e+03

cash_price7

Float64DType- Null values

- 60,778 (99.2%)

- Unique values

-

142 (0.2%)

This column has a high cardinality (> 40).

- Mean ± Std

- 127. ± 261.

- Median ± IQR

- 45.0 ± 100.

- Min | Max

- 0.00 | 3.00e+03

cash_price8

Float64DType- Null values

- 60,874 (99.4%)

- Unique values

-

138 (0.2%)

This column has a high cardinality (> 40).

- Mean ± Std

- 128. ± 274.

- Median ± IQR

- 44.0 ± 107.

- Min | Max

- 0.00 | 2.40e+03

cash_price9

Float64DType- Null values

- 60,939 (99.5%)

- Unique values

-

104 (0.2%)

This column has a high cardinality (> 40).

- Mean ± Std

- 93.5 ± 178.

- Median ± IQR

- 35.0 ± 72.0

- Min | Max

- 0.00 | 1.40e+03

cash_price10

Float64DType- Null values

- 60,995 (99.6%)

- Unique values

-

97 (0.2%)

This column has a high cardinality (> 40).

- Mean ± Std

- 101. ± 205.

- Median ± IQR

- 30.0 ± 80.0

- Min | Max

- 0.00 | 1.90e+03

cash_price11

Float64DType- Null values

- 61,034 (99.7%)

- Unique values

-

96 (0.2%)

This column has a high cardinality (> 40).

- Mean ± Std

- 107. ± 249.

- Median ± IQR

- 30.0 ± 67.0

- Min | Max

- 0.00 | 2.16e+03

cash_price12

Float64DType- Null values

- 61,074 (99.7%)

- Unique values

-

77 (0.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 73.3 ± 114.

- Median ± IQR

- 25.0 ± 78.0

- Min | Max

- 0.00 | 799.

cash_price13

Float64DType- Null values

- 61,105 (99.8%)

- Unique values

-

76 (0.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 93.8 ± 168.

- Median ± IQR

- 39.0 ± 85.0

- Min | Max

- 0.00 | 1.30e+03

cash_price14

Float64DType- Null values

- 61,123 (99.8%)

- Unique values

-

57 (< 0.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 67.3 ± 101.

- Median ± IQR

- 30.0 ± 58.0

- Min | Max

- 0.00 | 599.

cash_price15

Float64DType- Null values

- 61,143 (99.8%)

- Unique values

-

54 (< 0.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 88.3 ± 187.

- Median ± IQR

- 34.0 ± 72.0

- Min | Max

- 0.00 | 1.50e+03

cash_price16

Float64DType- Null values

- 61,160 (99.9%)

- Unique values

-

54 (< 0.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 110. ± 213.

- Median ± IQR

- 40.0 ± 93.0

- Min | Max

- 0.00 | 1.55e+03

cash_price17

Float64DType- Null values

- 61,174 (99.9%)

- Unique values

-

48 (< 0.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 99.6 ± 154.

- Median ± IQR

- 32.0 ± 116.

- Min | Max

- 0.00 | 799.

cash_price18

Float64DType- Null values

- 61,187 (99.9%)

- Unique values

-

42 (< 0.1%)

This column has a high cardinality (> 40).

- Mean ± Std

- 84.3 ± 152.

- Median ± IQR

- 35.0 ± 75.0

- Min | Max

- 0.00 | 999.

cash_price19

Float64DType- Null values

- 61,194 (99.9%)

- Unique values

- 37 (< 0.1%)

- Mean ± Std

- 98.2 ± 298.

- Median ± IQR

- 30.0 ± 42.0

- Min | Max

- 0.00 | 2.01e+03

cash_price20

Float64DType- Null values

- 61,203 (99.9%)

- Unique values

- 26 (< 0.1%)

- Mean ± Std

- 53.8 ± 88.1

- Median ± IQR

- 18.0 ± 42.0

- Min | Max

- 4.00 | 419.

cash_price21

Float64DType- Null values

- 61,210 (99.9%)

- Unique values

- 25 (< 0.1%)

- Mean ± Std

- 129. ± 378.

- Median ± IQR

- 25.0 ± 57.0

- Min | Max

- 0.00 | 2.09e+03

cash_price22

Float64DType- Null values

- 61,219 (100.0%)

- Unique values

- 21 (< 0.1%)

- Mean ± Std

- 129. ± 213.

- Median ± IQR

- 25.0 ± 93.0

- Min | Max

- 0.00 | 720.

cash_price23

Float64DType- Null values

- 61,224 (100.0%)

- Unique values

- 15 (< 0.1%)

- Mean ± Std

- 136. ± 260.

- Median ± IQR

- 15.0 ± 112.

- Min | Max

- 4.00 | 898.

make0

ObjectDType- Null values

- 685 (1.1%)

- Unique values

-

346 (0.6%)

This column has a high cardinality (> 40).

make1

ObjectDType- Null values

- 30,594 (50.0%)

- Unique values

-

311 (0.5%)

This column has a high cardinality (> 40).

make2

ObjectDType- Null values

- 52,577 (85.9%)

- Unique values

-

289 (0.5%)

This column has a high cardinality (> 40).

make3

ObjectDType- Null values

- 58,257 (95.1%)

- Unique values

-

243 (0.4%)

This column has a high cardinality (> 40).

make4

ObjectDType- Null values

- 59,832 (97.7%)

- Unique values

-

202 (0.3%)

This column has a high cardinality (> 40).

make5

ObjectDType- Null values

- 60,379 (98.6%)

- Unique values

-

149 (0.2%)

This column has a high cardinality (> 40).

make6

ObjectDType- Null values

- 60,642 (99.0%)

- Unique values

-

131 (0.2%)

This column has a high cardinality (> 40).

make7

ObjectDType- Null values

- 60,788 (99.3%)

- Unique values

-

122 (0.2%)

This column has a high cardinality (> 40).

make8

ObjectDType- Null values

- 60,884 (99.4%)

- Unique values

-

102 (0.2%)

This column has a high cardinality (> 40).

make9

ObjectDType- Null values

- 60,949 (99.5%)

- Unique values

-

85 (0.1%)

This column has a high cardinality (> 40).

make10

ObjectDType- Null values

- 61,003 (99.6%)

- Unique values

-

79 (0.1%)

This column has a high cardinality (> 40).

make11

ObjectDType- Null values

- 61,042 (99.7%)

- Unique values

-

67 (0.1%)

This column has a high cardinality (> 40).

make12

ObjectDType- Null values

- 61,081 (99.7%)

- Unique values

-

52 (< 0.1%)

This column has a high cardinality (> 40).

make13

ObjectDType- Null values

- 61,110 (99.8%)

- Unique values

-

53 (< 0.1%)

This column has a high cardinality (> 40).

make14

ObjectDType- Null values

- 61,128 (99.8%)

- Unique values

-

46 (< 0.1%)

This column has a high cardinality (> 40).

make15

ObjectDType- Null values

- 61,146 (99.8%)

- Unique values

- 35 (< 0.1%)

make16

ObjectDType- Null values

- 61,163 (99.9%)

- Unique values

- 31 (< 0.1%)

make17

ObjectDType- Null values

- 61,175 (99.9%)

- Unique values

- 22 (< 0.1%)

make18

ObjectDType- Null values

- 61,188 (99.9%)

- Unique values

- 25 (< 0.1%)

make19

ObjectDType- Null values

- 61,195 (99.9%)

- Unique values

- 20 (< 0.1%)

make20

ObjectDType- Null values

- 61,204 (99.9%)

- Unique values

- 17 (< 0.1%)

make21

ObjectDType- Null values

- 61,211 (100.0%)

- Unique values

- 12 (< 0.1%)

make22

ObjectDType- Null values

- 61,220 (100.0%)

- Unique values

- 12 (< 0.1%)

make23

ObjectDType- Null values

- 61,224 (100.0%)

- Unique values

- 9 (< 0.1%)

model0

ObjectDType- Null values

- 685 (1.1%)

- Unique values

-

2,624 (4.3%)

This column has a high cardinality (> 40).

model1

ObjectDType- Null values

- 30,594 (50.0%)

- Unique values

-

2,101 (3.4%)

This column has a high cardinality (> 40).

model2

ObjectDType- Null values

- 52,577 (85.9%)

- Unique values

-

1,507 (2.5%)

This column has a high cardinality (> 40).

model3

ObjectDType- Null values

- 58,257 (95.1%)

- Unique values

-

983 (1.6%)

This column has a high cardinality (> 40).

model4

ObjectDType- Null values

- 59,832 (97.7%)

- Unique values

-

658 (1.1%)

This column has a high cardinality (> 40).

model5

ObjectDType- Null values

- 60,379 (98.6%)

- Unique values

-

437 (0.7%)

This column has a high cardinality (> 40).

model6

ObjectDType- Null values

- 60,642 (99.0%)

- Unique values

-

333 (0.5%)

This column has a high cardinality (> 40).

model7

ObjectDType- Null values

- 60,788 (99.3%)

- Unique values

-

263 (0.4%)

This column has a high cardinality (> 40).

model8

ObjectDType- Null values

- 60,884 (99.4%)

- Unique values

-

212 (0.3%)

This column has a high cardinality (> 40).

model9

ObjectDType- Null values

- 60,949 (99.5%)

- Unique values

-

184 (0.3%)

This column has a high cardinality (> 40).

model10

ObjectDType- Null values

- 61,003 (99.6%)

- Unique values

-

147 (0.2%)

This column has a high cardinality (> 40).

model11

ObjectDType- Null values

- 61,042 (99.7%)

- Unique values

-

122 (0.2%)

This column has a high cardinality (> 40).

model12

ObjectDType- Null values

- 61,081 (99.7%)

- Unique values

-

99 (0.2%)

This column has a high cardinality (> 40).

model13

ObjectDType- Null values

- 61,110 (99.8%)

- Unique values

-

81 (0.1%)

This column has a high cardinality (> 40).

model14

ObjectDType- Null values

- 61,128 (99.8%)

- Unique values

-

73 (0.1%)

This column has a high cardinality (> 40).

model15

ObjectDType- Null values

- 61,146 (99.8%)

- Unique values

-

60 (< 0.1%)

This column has a high cardinality (> 40).

model16

ObjectDType- Null values

- 61,163 (99.9%)

- Unique values

-

53 (< 0.1%)

This column has a high cardinality (> 40).

model17

ObjectDType- Null values

- 61,175 (99.9%)

- Unique values

-

41 (< 0.1%)

This column has a high cardinality (> 40).

model18

ObjectDType- Null values

- 61,188 (99.9%)

- Unique values

- 36 (< 0.1%)

model19

ObjectDType- Null values

- 61,195 (99.9%)

- Unique values

- 33 (< 0.1%)

model20

ObjectDType- Null values

- 61,204 (99.9%)

- Unique values

- 25 (< 0.1%)

model21

ObjectDType- Null values

- 61,211 (100.0%)

- Unique values

- 23 (< 0.1%)

model22

ObjectDType- Null values

- 61,220 (100.0%)

- Unique values

- 18 (< 0.1%)

model23

ObjectDType- Null values

- 61,224 (100.0%)

- Unique values

- 16 (< 0.1%)

goods_code0

ObjectDType- Null values

- 0 (0.0%)

- Unique values

-

4,404 (7.2%)

This column has a high cardinality (> 40).

goods_code1

ObjectDType- Null values

- 30,267 (49.4%)

- Unique values

-

3,351 (5.5%)

This column has a high cardinality (> 40).

goods_code2

ObjectDType- Null values

- 52,467 (85.7%)

- Unique values

-

2,246 (3.7%)

This column has a high cardinality (> 40).

goods_code3

ObjectDType- Null values

- 58,223 (95.1%)

- Unique values

-

1,436 (2.3%)

This column has a high cardinality (> 40).

goods_code4

ObjectDType- Null values

- 59,813 (97.7%)

- Unique values

-

989 (1.6%)

This column has a high cardinality (> 40).

goods_code5

ObjectDType- Null values

- 60,366 (98.6%)

- Unique values

-

661 (1.1%)

This column has a high cardinality (> 40).

goods_code6

ObjectDType- Null values

- 60,632 (99.0%)

- Unique values

-

516 (0.8%)

This column has a high cardinality (> 40).

goods_code7

ObjectDType- Null values

- 60,778 (99.2%)

- Unique values

-

396 (0.6%)

This column has a high cardinality (> 40).

goods_code8

ObjectDType- Null values

- 60,874 (99.4%)

- Unique values

-

332 (0.5%)

This column has a high cardinality (> 40).

goods_code9

ObjectDType- Null values

- 60,939 (99.5%)

- Unique values

-

269 (0.4%)

This column has a high cardinality (> 40).

goods_code10

ObjectDType- Null values

- 60,995 (99.6%)

- Unique values

-

222 (0.4%)

This column has a high cardinality (> 40).

goods_code11

ObjectDType- Null values

- 61,034 (99.7%)

- Unique values

-

183 (0.3%)

This column has a high cardinality (> 40).

goods_code12

ObjectDType- Null values

- 61,074 (99.7%)

- Unique values

-

153 (0.2%)

This column has a high cardinality (> 40).

goods_code13

ObjectDType- Null values

- 61,105 (99.8%)

- Unique values

-

126 (0.2%)

This column has a high cardinality (> 40).

goods_code14

ObjectDType- Null values

- 61,123 (99.8%)

- Unique values

-

106 (0.2%)

This column has a high cardinality (> 40).

goods_code15

ObjectDType- Null values

- 61,143 (99.8%)

- Unique values

-

91 (0.1%)

This column has a high cardinality (> 40).

goods_code16

ObjectDType- Null values

- 61,160 (99.9%)

- Unique values

-

76 (0.1%)

This column has a high cardinality (> 40).

goods_code17

ObjectDType- Null values

- 61,174 (99.9%)

- Unique values

-

60 (< 0.1%)

This column has a high cardinality (> 40).

goods_code18

ObjectDType- Null values

- 61,187 (99.9%)

- Unique values

-

50 (< 0.1%)

This column has a high cardinality (> 40).

goods_code19

ObjectDType- Null values

- 61,194 (99.9%)

- Unique values

-

43 (< 0.1%)

This column has a high cardinality (> 40).

goods_code20

ObjectDType- Null values

- 61,203 (99.9%)

- Unique values

- 33 (< 0.1%)

goods_code21

ObjectDType- Null values

- 61,210 (99.9%)

- Unique values

- 27 (< 0.1%)

goods_code22

ObjectDType- Null values

- 61,219 (100.0%)

- Unique values

- 19 (< 0.1%)

goods_code23

ObjectDType- Null values

- 61,224 (100.0%)

- Unique values

- 16 (< 0.1%)

Nbr_of_prod_purchas0

Int64DType- Null values

- 0 (0.0%)

- Unique values

- 14 (< 0.1%)

- Mean ± Std

- 1.03 ± 0.365

- Median ± IQR

- 1 ± 0

- Min | Max

- 1 | 40

Nbr_of_prod_purchas1

Float64DType- Null values

- 30,267 (49.4%)

- Unique values

- 10 (< 0.1%)

- Mean ± Std

- 1.03 ± 0.265

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 10.0

Nbr_of_prod_purchas2

Float64DType- Null values

- 52,467 (85.7%)

- Unique values

- 10 (< 0.1%)

- Mean ± Std

- 1.07 ± 0.427

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 15.0

Nbr_of_prod_purchas3

Float64DType- Null values

- 58,223 (95.1%)

- Unique values

- 13 (< 0.1%)

- Mean ± Std

- 1.16 ± 0.876

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 28.0

Nbr_of_prod_purchas4

Float64DType- Null values

- 59,813 (97.7%)

- Unique values

- 9 (< 0.1%)

- Mean ± Std

- 1.24 ± 0.827

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 15.0

Nbr_of_prod_purchas5

Float64DType- Null values

- 60,366 (98.6%)

- Unique values

- 8 (< 0.1%)

- Mean ± Std

- 1.25 ± 1.09

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 24.0

Nbr_of_prod_purchas6

Float64DType- Null values

- 60,632 (99.0%)

- Unique values

- 9 (< 0.1%)

- Mean ± Std

- 1.28 ± 0.812

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 9.00

Nbr_of_prod_purchas7

Float64DType- Null values

- 60,778 (99.2%)

- Unique values

- 9 (< 0.1%)

- Mean ± Std

- 1.34 ± 1.09

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 14.0

Nbr_of_prod_purchas8

Float64DType- Null values

- 60,874 (99.4%)

- Unique values

- 10 (< 0.1%)

- Mean ± Std

- 1.42 ± 1.16

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 10.0

Nbr_of_prod_purchas9

Float64DType- Null values

- 60,939 (99.5%)

- Unique values

- 7 (< 0.1%)

- Mean ± Std

- 1.35 ± 0.920

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 8.00

Nbr_of_prod_purchas10

Float64DType- Null values

- 60,995 (99.6%)

- Unique values

- 9 (< 0.1%)

- Mean ± Std

- 1.39 ± 1.19

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 12.0

Nbr_of_prod_purchas11

Float64DType- Null values

- 61,034 (99.7%)

- Unique values

- 6 (< 0.1%)

- Mean ± Std

- 1.30 ± 0.853

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 6.00

Nbr_of_prod_purchas12

Float64DType- Null values

- 61,074 (99.7%)

- Unique values

- 4 (< 0.1%)

- Mean ± Std

- 1.19 ± 0.465

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 4.00

Nbr_of_prod_purchas13

Float64DType- Null values

- 61,105 (99.8%)

- Unique values

- 6 (< 0.1%)

- Mean ± Std

- 1.37 ± 1.18

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 12.0

Nbr_of_prod_purchas14

Float64DType- Null values

- 61,123 (99.8%)

- Unique values

- 6 (< 0.1%)

- Mean ± Std

- 1.32 ± 0.866

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 6.00

Nbr_of_prod_purchas15

Float64DType- Null values

- 61,143 (99.8%)

- Unique values

- 6 (< 0.1%)

- Mean ± Std

- 1.32 ± 0.807

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 6.00

Nbr_of_prod_purchas16

Float64DType- Null values

- 61,160 (99.9%)

- Unique values

- 5 (< 0.1%)

- Mean ± Std

- 1.47 ± 1.38

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 12.0

Nbr_of_prod_purchas17

Float64DType- Null values

- 61,174 (99.9%)

- Unique values

- 4 (< 0.1%)

- Mean ± Std

- 1.39 ± 1.87

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 16.0

Nbr_of_prod_purchas18

Float64DType- Null values

- 61,187 (99.9%)

- Unique values

- 4 (< 0.1%)

- Mean ± Std

- 1.20 ± 0.810

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 6.00

Nbr_of_prod_purchas19

Float64DType- Null values

- 61,194 (99.9%)

- Unique values

- 5 (< 0.1%)

- Mean ± Std

- 1.40 ± 1.06

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 7.00

Nbr_of_prod_purchas20

Float64DType- Null values

- 61,203 (99.9%)

- Unique values

- 3 (< 0.1%)

- Mean ± Std

- 1.18 ± 0.563

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 4.00

Nbr_of_prod_purchas21

Float64DType- Null values

- 61,210 (99.9%)

- Unique values

- 4 (< 0.1%)

- Mean ± Std

- 1.48 ± 1.21

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 7.00

Nbr_of_prod_purchas22

Float64DType- Null values

- 61,219 (100.0%)

- Unique values

- 4 (< 0.1%)

- Mean ± Std

- 1.27 ± 0.767

- Median ± IQR

- 1.00 ± 0.00

- Min | Max

- 1.00 | 4.00

Nbr_of_prod_purchas23

Float64DType- Null values

- 61,224 (100.0%)

- Unique values

- 2 (< 0.1%)

- Mean ± Std

- 1.29 ± 0.470

- Median ± IQR

- 1.00 ± 1.00

- Min | Max

- 1.00 | 2.00

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

|

Column

|

Column name

|

dtype

|

Is sorted

|

Null values

|

Unique values

|

Mean

|

Std

|

Min

|

Median

|

Max

|

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | basket_ID | Int64DType | True | 0 (0.0%) | 61241 (100.0%) | 3.82e+04 | 2.21e+04 | 0 | 38,158 | 76,543 |

| 1 | item0 | ObjectDType | False | 0 (0.0%) | 133 (0.2%) | |||||

| 2 | item1 | ObjectDType | False | 30267 (49.4%) | 130 (0.2%) | |||||

| 3 | item2 | ObjectDType | False | 52467 (85.7%) | 121 (0.2%) | |||||

| 4 | item3 | ObjectDType | False | 58223 (95.1%) | 119 (0.2%) | |||||

| 5 | item4 | ObjectDType | False | 59813 (97.7%) | 98 (0.2%) | |||||

| 6 | item5 | ObjectDType | False | 60366 (98.6%) | 91 (0.1%) | |||||

| 7 | item6 | ObjectDType | False | 60632 (99.0%) | 81 (0.1%) | |||||

| 8 | item7 | ObjectDType | False | 60778 (99.2%) | 76 (0.1%) | |||||

| 9 | item8 | ObjectDType | False | 60874 (99.4%) | 71 (0.1%) | |||||

| 10 | item9 | ObjectDType | False | 60939 (99.5%) | 58 (< 0.1%) | |||||

| 11 | item10 | ObjectDType | False | 60995 (99.6%) | 61 (< 0.1%) | |||||

| 12 | item11 | ObjectDType | False | 61034 (99.7%) | 49 (< 0.1%) | |||||

| 13 | item12 | ObjectDType | False | 61074 (99.7%) | 47 (< 0.1%) | |||||

| 14 | item13 | ObjectDType | False | 61105 (99.8%) | 47 (< 0.1%) | |||||

| 15 | item14 | ObjectDType | False | 61123 (99.8%) | 44 (< 0.1%) | |||||

| 16 | item15 | ObjectDType | False | 61143 (99.8%) | 36 (< 0.1%) | |||||

| 17 | item16 | ObjectDType | False | 61160 (99.9%) | 35 (< 0.1%) | |||||

| 18 | item17 | ObjectDType | False | 61174 (99.9%) | 29 (< 0.1%) | |||||

| 19 | item18 | ObjectDType | False | 61187 (99.9%) | 24 (< 0.1%) | |||||

| 20 | item19 | ObjectDType | False | 61194 (99.9%) | 20 (< 0.1%) | |||||

| 21 | item20 | ObjectDType | False | 61203 (99.9%) | 22 (< 0.1%) | |||||

| 22 | item21 | ObjectDType | False | 61210 (99.9%) | 15 (< 0.1%) | |||||

| 23 | item22 | ObjectDType | False | 61219 (100.0%) | 15 (< 0.1%) | |||||

| 24 | item23 | ObjectDType | False | 61224 (100.0%) | 11 (< 0.1%) | |||||

| 25 | cash_price0 | Int64DType | False | 0 (0.0%) | 1102 (1.8%) | 1.06e+03 | 679. | 2 | 949 | 18,349 |

| 26 | cash_price1 | Float64DType | False | 30267 (49.4%) | 689 (1.1%) | 180. | 379. | 0.00 | 30.0 | 6.50e+03 |

| 27 | cash_price2 | Float64DType | False | 52467 (85.7%) | 511 (0.8%) | 184. | 369. | 0.00 | 30.0 | 6.00e+03 |

| 28 | cash_price3 | Float64DType | False | 58223 (95.1%) | 372 (0.6%) | 161. | 298. | 0.00 | 40.0 | 2.85e+03 |

| 29 | cash_price4 | Float64DType | False | 59813 (97.7%) | 283 (0.5%) | 187. | 355. | 0.00 | 52.0 | 3.60e+03 |

| 30 | cash_price5 | Float64DType | False | 60366 (98.6%) | 216 (0.4%) | 161. | 297. | 0.00 | 50.0 | 3.00e+03 |

| 31 | cash_price6 | Float64DType | False | 60632 (99.0%) | 173 (0.3%) | 131. | 268. | 0.00 | 50.0 | 4.20e+03 |

| 32 | cash_price7 | Float64DType | False | 60778 (99.2%) | 142 (0.2%) | 127. | 261. | 0.00 | 45.0 | 3.00e+03 |

| 33 | cash_price8 | Float64DType | False | 60874 (99.4%) | 138 (0.2%) | 128. | 274. | 0.00 | 44.0 | 2.40e+03 |

| 34 | cash_price9 | Float64DType | False | 60939 (99.5%) | 104 (0.2%) | 93.5 | 178. | 0.00 | 35.0 | 1.40e+03 |

| 35 | cash_price10 | Float64DType | False | 60995 (99.6%) | 97 (0.2%) | 101. | 205. | 0.00 | 30.0 | 1.90e+03 |

| 36 | cash_price11 | Float64DType | False | 61034 (99.7%) | 96 (0.2%) | 107. | 249. | 0.00 | 30.0 | 2.16e+03 |

| 37 | cash_price12 | Float64DType | False | 61074 (99.7%) | 77 (0.1%) | 73.3 | 114. | 0.00 | 25.0 | 799. |

| 38 | cash_price13 | Float64DType | False | 61105 (99.8%) | 76 (0.1%) | 93.8 | 168. | 0.00 | 39.0 | 1.30e+03 |

| 39 | cash_price14 | Float64DType | False | 61123 (99.8%) | 57 (< 0.1%) | 67.3 | 101. | 0.00 | 30.0 | 599. |

| 40 | cash_price15 | Float64DType | False | 61143 (99.8%) | 54 (< 0.1%) | 88.3 | 187. | 0.00 | 34.0 | 1.50e+03 |

| 41 | cash_price16 | Float64DType | False | 61160 (99.9%) | 54 (< 0.1%) | 110. | 213. | 0.00 | 40.0 | 1.55e+03 |

| 42 | cash_price17 | Float64DType | False | 61174 (99.9%) | 48 (< 0.1%) | 99.6 | 154. | 0.00 | 32.0 | 799. |

| 43 | cash_price18 | Float64DType | False | 61187 (99.9%) | 42 (< 0.1%) | 84.3 | 152. | 0.00 | 35.0 | 999. |

| 44 | cash_price19 | Float64DType | False | 61194 (99.9%) | 37 (< 0.1%) | 98.2 | 298. | 0.00 | 30.0 | 2.01e+03 |

| 45 | cash_price20 | Float64DType | False | 61203 (99.9%) | 26 (< 0.1%) | 53.8 | 88.1 | 4.00 | 18.0 | 419. |

| 46 | cash_price21 | Float64DType | False | 61210 (99.9%) | 25 (< 0.1%) | 129. | 378. | 0.00 | 25.0 | 2.09e+03 |

| 47 | cash_price22 | Float64DType | False | 61219 (100.0%) | 21 (< 0.1%) | 129. | 213. | 0.00 | 25.0 | 720. |

| 48 | cash_price23 | Float64DType | False | 61224 (100.0%) | 15 (< 0.1%) | 136. | 260. | 4.00 | 15.0 | 898. |

| 49 | make0 | ObjectDType | False | 685 (1.1%) | 346 (0.6%) | |||||

| 50 | make1 | ObjectDType | False | 30594 (50.0%) | 311 (0.5%) | |||||

| 51 | make2 | ObjectDType | False | 52577 (85.9%) | 289 (0.5%) | |||||

| 52 | make3 | ObjectDType | False | 58257 (95.1%) | 243 (0.4%) | |||||

| 53 | make4 | ObjectDType | False | 59832 (97.7%) | 202 (0.3%) | |||||

| 54 | make5 | ObjectDType | False | 60379 (98.6%) | 149 (0.2%) | |||||

| 55 | make6 | ObjectDType | False | 60642 (99.0%) | 131 (0.2%) | |||||

| 56 | make7 | ObjectDType | False | 60788 (99.3%) | 122 (0.2%) | |||||

| 57 | make8 | ObjectDType | False | 60884 (99.4%) | 102 (0.2%) | |||||

| 58 | make9 | ObjectDType | False | 60949 (99.5%) | 85 (0.1%) | |||||

| 59 | make10 | ObjectDType | False | 61003 (99.6%) | 79 (0.1%) | |||||

| 60 | make11 | ObjectDType | False | 61042 (99.7%) | 67 (0.1%) | |||||

| 61 | make12 | ObjectDType | False | 61081 (99.7%) | 52 (< 0.1%) | |||||

| 62 | make13 | ObjectDType | False | 61110 (99.8%) | 53 (< 0.1%) | |||||

| 63 | make14 | ObjectDType | False | 61128 (99.8%) | 46 (< 0.1%) | |||||

| 64 | make15 | ObjectDType | False | 61146 (99.8%) | 35 (< 0.1%) | |||||

| 65 | make16 | ObjectDType | False | 61163 (99.9%) | 31 (< 0.1%) | |||||

| 66 | make17 | ObjectDType | False | 61175 (99.9%) | 22 (< 0.1%) | |||||

| 67 | make18 | ObjectDType | False | 61188 (99.9%) | 25 (< 0.1%) | |||||

| 68 | make19 | ObjectDType | False | 61195 (99.9%) | 20 (< 0.1%) | |||||

| 69 | make20 | ObjectDType | False | 61204 (99.9%) | 17 (< 0.1%) | |||||

| 70 | make21 | ObjectDType | False | 61211 (100.0%) | 12 (< 0.1%) | |||||

| 71 | make22 | ObjectDType | False | 61220 (100.0%) | 12 (< 0.1%) | |||||

| 72 | make23 | ObjectDType | False | 61224 (100.0%) | 9 (< 0.1%) | |||||

| 73 | model0 | ObjectDType | False | 685 (1.1%) | 2624 (4.3%) | |||||

| 74 | model1 | ObjectDType | False | 30594 (50.0%) | 2101 (3.4%) | |||||

| 75 | model2 | ObjectDType | False | 52577 (85.9%) | 1507 (2.5%) | |||||

| 76 | model3 | ObjectDType | False | 58257 (95.1%) | 983 (1.6%) | |||||

| 77 | model4 | ObjectDType | False | 59832 (97.7%) | 658 (1.1%) | |||||

| 78 | model5 | ObjectDType | False | 60379 (98.6%) | 437 (0.7%) | |||||

| 79 | model6 | ObjectDType | False | 60642 (99.0%) | 333 (0.5%) | |||||

| 80 | model7 | ObjectDType | False | 60788 (99.3%) | 263 (0.4%) | |||||

| 81 | model8 | ObjectDType | False | 60884 (99.4%) | 212 (0.3%) | |||||

| 82 | model9 | ObjectDType | False | 60949 (99.5%) | 184 (0.3%) | |||||

| 83 | model10 | ObjectDType | False | 61003 (99.6%) | 147 (0.2%) | |||||

| 84 | model11 | ObjectDType | False | 61042 (99.7%) | 122 (0.2%) | |||||

| 85 | model12 | ObjectDType | False | 61081 (99.7%) | 99 (0.2%) | |||||

| 86 | model13 | ObjectDType | False | 61110 (99.8%) | 81 (0.1%) | |||||

| 87 | model14 | ObjectDType | False | 61128 (99.8%) | 73 (0.1%) | |||||

| 88 | model15 | ObjectDType | False | 61146 (99.8%) | 60 (< 0.1%) | |||||

| 89 | model16 | ObjectDType | False | 61163 (99.9%) | 53 (< 0.1%) | |||||

| 90 | model17 | ObjectDType | False | 61175 (99.9%) | 41 (< 0.1%) | |||||

| 91 | model18 | ObjectDType | False | 61188 (99.9%) | 36 (< 0.1%) | |||||

| 92 | model19 | ObjectDType | False | 61195 (99.9%) | 33 (< 0.1%) | |||||

| 93 | model20 | ObjectDType | False | 61204 (99.9%) | 25 (< 0.1%) | |||||

| 94 | model21 | ObjectDType | False | 61211 (100.0%) | 23 (< 0.1%) | |||||

| 95 | model22 | ObjectDType | False | 61220 (100.0%) | 18 (< 0.1%) | |||||

| 96 | model23 | ObjectDType | False | 61224 (100.0%) | 16 (< 0.1%) | |||||

| 97 | goods_code0 | ObjectDType | False | 0 (0.0%) | 4404 (7.2%) | |||||

| 98 | goods_code1 | ObjectDType | False | 30267 (49.4%) | 3351 (5.5%) | |||||

| 99 | goods_code2 | ObjectDType | False | 52467 (85.7%) | 2246 (3.7%) | |||||

| 100 | goods_code3 | ObjectDType | False | 58223 (95.1%) | 1436 (2.3%) | |||||

| 101 | goods_code4 | ObjectDType | False | 59813 (97.7%) | 989 (1.6%) | |||||

| 102 | goods_code5 | ObjectDType | False | 60366 (98.6%) | 661 (1.1%) | |||||

| 103 | goods_code6 | ObjectDType | False | 60632 (99.0%) | 516 (0.8%) | |||||

| 104 | goods_code7 | ObjectDType | False | 60778 (99.2%) | 396 (0.6%) | |||||

| 105 | goods_code8 | ObjectDType | False | 60874 (99.4%) | 332 (0.5%) | |||||

| 106 | goods_code9 | ObjectDType | False | 60939 (99.5%) | 269 (0.4%) | |||||

| 107 | goods_code10 | ObjectDType | False | 60995 (99.6%) | 222 (0.4%) | |||||

| 108 | goods_code11 | ObjectDType | False | 61034 (99.7%) | 183 (0.3%) | |||||

| 109 | goods_code12 | ObjectDType | False | 61074 (99.7%) | 153 (0.2%) | |||||

| 110 | goods_code13 | ObjectDType | False | 61105 (99.8%) | 126 (0.2%) | |||||

| 111 | goods_code14 | ObjectDType | False | 61123 (99.8%) | 106 (0.2%) | |||||

| 112 | goods_code15 | ObjectDType | False | 61143 (99.8%) | 91 (0.1%) | |||||

| 113 | goods_code16 | ObjectDType | False | 61160 (99.9%) | 76 (0.1%) | |||||

| 114 | goods_code17 | ObjectDType | False | 61174 (99.9%) | 60 (< 0.1%) | |||||

| 115 | goods_code18 | ObjectDType | False | 61187 (99.9%) | 50 (< 0.1%) | |||||

| 116 | goods_code19 | ObjectDType | False | 61194 (99.9%) | 43 (< 0.1%) | |||||

| 117 | goods_code20 | ObjectDType | False | 61203 (99.9%) | 33 (< 0.1%) | |||||

| 118 | goods_code21 | ObjectDType | False | 61210 (99.9%) | 27 (< 0.1%) | |||||

| 119 | goods_code22 | ObjectDType | False | 61219 (100.0%) | 19 (< 0.1%) | |||||

| 120 | goods_code23 | ObjectDType | False | 61224 (100.0%) | 16 (< 0.1%) | |||||

| 121 | Nbr_of_prod_purchas0 | Int64DType | False | 0 (0.0%) | 14 (< 0.1%) | 1.03 | 0.365 | 1 | 1 | 40 |

| 122 | Nbr_of_prod_purchas1 | Float64DType | False | 30267 (49.4%) | 10 (< 0.1%) | 1.03 | 0.265 | 1.00 | 1.00 | 10.0 |

| 123 | Nbr_of_prod_purchas2 | Float64DType | False | 52467 (85.7%) | 10 (< 0.1%) | 1.07 | 0.427 | 1.00 | 1.00 | 15.0 |

| 124 | Nbr_of_prod_purchas3 | Float64DType | False | 58223 (95.1%) | 13 (< 0.1%) | 1.16 | 0.876 | 1.00 | 1.00 | 28.0 |

| 125 | Nbr_of_prod_purchas4 | Float64DType | False | 59813 (97.7%) | 9 (< 0.1%) | 1.24 | 0.827 | 1.00 | 1.00 | 15.0 |

| 126 | Nbr_of_prod_purchas5 | Float64DType | False | 60366 (98.6%) | 8 (< 0.1%) | 1.25 | 1.09 | 1.00 | 1.00 | 24.0 |

| 127 | Nbr_of_prod_purchas6 | Float64DType | False | 60632 (99.0%) | 9 (< 0.1%) | 1.28 | 0.812 | 1.00 | 1.00 | 9.00 |

| 128 | Nbr_of_prod_purchas7 | Float64DType | False | 60778 (99.2%) | 9 (< 0.1%) | 1.34 | 1.09 | 1.00 | 1.00 | 14.0 |

| 129 | Nbr_of_prod_purchas8 | Float64DType | False | 60874 (99.4%) | 10 (< 0.1%) | 1.42 | 1.16 | 1.00 | 1.00 | 10.0 |

| 130 | Nbr_of_prod_purchas9 | Float64DType | False | 60939 (99.5%) | 7 (< 0.1%) | 1.35 | 0.920 | 1.00 | 1.00 | 8.00 |

| 131 | Nbr_of_prod_purchas10 | Float64DType | False | 60995 (99.6%) | 9 (< 0.1%) | 1.39 | 1.19 | 1.00 | 1.00 | 12.0 |

| 132 | Nbr_of_prod_purchas11 | Float64DType | False | 61034 (99.7%) | 6 (< 0.1%) | 1.30 | 0.853 | 1.00 | 1.00 | 6.00 |

| 133 | Nbr_of_prod_purchas12 | Float64DType | False | 61074 (99.7%) | 4 (< 0.1%) | 1.19 | 0.465 | 1.00 | 1.00 | 4.00 |

| 134 | Nbr_of_prod_purchas13 | Float64DType | False | 61105 (99.8%) | 6 (< 0.1%) | 1.37 | 1.18 | 1.00 | 1.00 | 12.0 |

| 135 | Nbr_of_prod_purchas14 | Float64DType | False | 61123 (99.8%) | 6 (< 0.1%) | 1.32 | 0.866 | 1.00 | 1.00 | 6.00 |

| 136 | Nbr_of_prod_purchas15 | Float64DType | False | 61143 (99.8%) | 6 (< 0.1%) | 1.32 | 0.807 | 1.00 | 1.00 | 6.00 |

| 137 | Nbr_of_prod_purchas16 | Float64DType | False | 61160 (99.9%) | 5 (< 0.1%) | 1.47 | 1.38 | 1.00 | 1.00 | 12.0 |

| 138 | Nbr_of_prod_purchas17 | Float64DType | False | 61174 (99.9%) | 4 (< 0.1%) | 1.39 | 1.87 | 1.00 | 1.00 | 16.0 |

| 139 | Nbr_of_prod_purchas18 | Float64DType | False | 61187 (99.9%) | 4 (< 0.1%) | 1.20 | 0.810 | 1.00 | 1.00 | 6.00 |

| 140 | Nbr_of_prod_purchas19 | Float64DType | False | 61194 (99.9%) | 5 (< 0.1%) | 1.40 | 1.06 | 1.00 | 1.00 | 7.00 |

| 141 | Nbr_of_prod_purchas20 | Float64DType | False | 61203 (99.9%) | 3 (< 0.1%) | 1.18 | 0.563 | 1.00 | 1.00 | 4.00 |

| 142 | Nbr_of_prod_purchas21 | Float64DType | False | 61210 (99.9%) | 4 (< 0.1%) | 1.48 | 1.21 | 1.00 | 1.00 | 7.00 |

| 143 | Nbr_of_prod_purchas22 | Float64DType | False | 61219 (100.0%) | 4 (< 0.1%) | 1.27 | 0.767 | 1.00 | 1.00 | 4.00 |

| 144 | Nbr_of_prod_purchas23 | Float64DType | False | 61224 (100.0%) | 2 (< 0.1%) | 1.29 | 0.470 | 1.00 | 1.00 | 2.00 |

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

max_plot_columns

limit set for the TableReport during report creation.

No columns match the selected filter: . You can change the column filter in the dropdown menu above.

max_association_columns parameter.

Please enable javascript

The skrub table reports need javascript to display correctly. If you are displaying a report in a Jupyter notebook and you see this message, you may need to re-execute the cell or to trust the notebook (button on the top right or "File > Trust notebook").

Look at the “Stats” section of the TableReport above. Does anything strike you?

Not only did we create 144 columns, but most of these columns are filled with NaN, which is very inefficient for learning!

This is because each basket contains a variable number of products, up to 24, and we created one column for each product attribute, for each position (up to 24) in the dataframe.

Moreover, if we wanted to replace text columns with encodings, we would create

\(d \times 24 \times 2\) columns (encoding of dimensionality \(d\), for

24 products, for the "item" and "make" columns), which would explode the

memory usage.

AggJoiner#

Let’s now see how the AggJoiner can help us solve this. We begin with splitting our

basket dataset in a training and testing set.

from sklearn.model_selection import train_test_split

X, y = baskets[["ID"]], baskets["fraud_flag"]

X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, test_size=0.1)

X_train.shape, y_train.shape

((55116, 1), (55116,))