TextEncoder

- class skrub.TextEncoder(model_name='intfloat/e5-small-v2', n_components=30, device=None, batch_size=32, token_env_variable=None, cache_folder=None, store_weights_in_pickle=False, random_state=None, verbose=False)

Encode string features by applying a pretrained language model downloaded from the HuggingFace Hub.

Note

TextEncoder is a type of single-column transformer. Unlike most scikit-learn estimators, its fit, transform and fit_transform methods expect a single column (a pandas or polars Series) rather than a full dataframe. To apply this transformer to one or more columns in a dataframe, use it as a parameter in a skrub.ApplyToCols or a skrub.TableVectorizer.

To apply to all columns:

ApplyToCols(TextEncoder())

To apply to selected columns:

ApplyToCols(TextEncoder(), cols=['col_name_1', 'col_name_2'])

This is a thin wrapper around SentenceTransformer that follows the scikit-learn API, making it usable within a scikit-learn pipeline.

Warning

To use this class, you need to install the optional transformers dependencies for skrub. See the “deep learning dependencies” section in the Install guide for more details.

- Parameters:

- model_name

str, default="intfloat/e5-small-v2" If a filepath on disk is passed, this class loads the model from that path.

Otherwise, it first tries to download a pre-trained SentenceTransformer model. If that fails, it tries to construct a model from the HuggingFace models repository with that name.

The following models have a good performance/memory usage tradeoff:

- intfloat/e5-small-v2
- all-MiniLM-L6-v2
- all-mpnet-base-v2

You can find more options on the sentence-transformers documentation.

The default model is a shrunk version of e5-v2, which has shown good performance in the benchmark of [1].

- n_components

int or None, default=30 The number of embedding dimensions. As the number of dimensions differs across embedding models, this class uses a PCA to set the number of embedding dimensions to n_components during transform. Set n_components=None to skip the PCA dimension reduction mechanism.

See [1] for more details on the choice of the PCA and default n_components.

- device

str, default=None Device (e.g. “cpu”, “cuda”, “mps”) that should be used for computation. If None, checks if a GPU can be used. Note that macOS ARM64 users can enable the GPU on their local machine by setting device="mps".

- batch_size

int, default=32 The batch size to use during transform.

- token_env_variable

str, default=None The name of the environment variable which stores your HuggingFace authentication token to download private models. Note that we only store the name of the variable but not the token itself.

- cache_folder

str, default=None Path to store models. By default ~/skrub_data. See skrub.datasets._utils.get_data_dir(). Note that when unpickling TextEncoder on another machine, the cache_folder path needs to be accessible to store the downloaded model.

- store_weights_in_pickle

bool, default=False Whether or not to keep the loaded sentence-transformers model in the TextEncoder when pickling.

When set to False, the _estimator property is removed from the object to pickle, which significantly reduces the size of the serialized object. Note that when the serialized object is unpickled on another machine, the TextEncoder will try to download the sentence-transformer model again from HuggingFace Hub. This process could fail if, for example, the machine doesn’t have internet access. Additionally, if you use weights stored on disk that are not on the HuggingFace Hub (by passing a path to model_name), these weights will not be pickled either. Therefore you would need to copy them to the machine where you unpickle the TextEncoder.

When set to True, the _estimator property is included in the serialized object. Users deploying fine-tuned models stored on disk are recommended to use this option. Note that the machine where the TextEncoder is unpickled must have the same device as the machine where it was pickled.

- random_state

int, RandomState instance or None, default=None Used when the PCA dimension reduction mechanism is used, for reproducible results across multiple function calls.

- verbose

bool, default=False Verbose level, controls whether to show a progress bar or not during transform.

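The effect of store_weights_in_pickle described above can be pictured with a small standard-library sketch. The class below is a hypothetical stand-in, not skrub's implementation: it only assumes that the loaded model lives in an _estimator attribute (as the parameter description states) and that it is dropped from the pickled state when the flag is False. The list of floats is a placeholder for real model weights.

```python
import pickle


class EncoderPickleSketch:
    """Hypothetical stand-in used for illustration; not skrub's TextEncoder."""

    def __init__(self, model_name, store_weights_in_pickle=False):
        self.model_name = model_name
        self.store_weights_in_pickle = store_weights_in_pickle
        # Placeholder for the loaded model weights (a real model is far larger).
        self._estimator = [0.0] * 10_000

    def __getstate__(self):
        # pickle calls this method to obtain the state it serializes.
        state = self.__dict__.copy()
        if not self.store_weights_in_pickle:
            # Drop the heavy model; it has to be loaded again after unpickling.
            state.pop("_estimator", None)
        return state


light_state = EncoderPickleSketch("intfloat/e5-small-v2").__getstate__()
heavy_state = EncoderPickleSketch(
    "intfloat/e5-small-v2", store_weights_in_pickle=True
).__getstate__()

# Serialize the states directly to compare payload sizes.
light_size = len(pickle.dumps(light_state))
heavy_size = len(pickle.dumps(heavy_state))

print("_estimator" in light_state)  # False
print("_estimator" in heavy_state)  # True
print(light_size < heavy_size)  # True
```

The trade-off is the one the parameter description spells out: a small pickle that needs the model to be available again at load time, or a large pickle that carries the weights with it.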

- Attributes:

- input_name_

str The name of the fitted column, or “text_enc” if the column has no name.

- pca_

sklearn.decomposition.PCA A fitted PCA to reduce the embedding dimensionality (either PCA or truncation, see the n_components parameter).

- n_components_

int The number of dimensions of the embeddings after dimensionality reduction.


See also

MinHashEncoder: Encode string columns as a numeric array with the minhash method.

GapEncoder: Encode string columns by constructing latent topics.

StringEncoder: Fast n-gram encoding of string columns.

SimilarityEncoder: Encode string columns as a numeric array with n-gram string similarity.

Notes

This class uses a pre-trained model, so calling fit or fit_transform will not train or fine-tune the model. Instead, the model is loaded from disk, and a PCA is fitted to reduce the dimension of the language model’s output, if n_components is not None.

When PCA is disabled, this class is essentially stateless, with loading the pre-trained model from disk being the only difference between fit_transform and transform.

Be aware that parallelizing this class (e.g., using TableVectorizer with n_jobs > 1) may be computationally expensive. This is because a copy of the pre-trained model is loaded into memory for each thread. Therefore, we recommend keeping the default n_jobs=None (or setting it to 1) in the TableVectorizer and letting PyTorch handle parallelism.

If memory usage is a concern, check the characteristics of your selected model.

References

[1] L. Grinsztajn, M. Kim, E. Oyallon, G. Varoquaux, “Vectorizing string entries for data processing on tables: when are larger language models better?”, 2023. https://hal.science/hal-04345931

Examples

>>> import pandas as pd
>>> from skrub import TextEncoder

Let’s encode video comments using only 2 embedding dimensions:

>>> enc = TextEncoder(
...     model_name='intfloat/e5-small-v2', n_components=2
... )
>>> X = pd.Series([
...   "The professor snatched a good interview out of the jaws of these questions.",
...   "Bookmarking this to watch later.",
...   "When you don't know the lyrics of the song except the chorus",
... ], name='video comments')

Fitting does not train the underlying pre-trained deep-learning model, but it performs various checks and enables dimension reduction.

>>> enc.fit_transform(X)
   video comments_0  video comments_1
0          0.411395          0.096504
1         -0.105210         -0.344567
2         -0.306184          0.248063

Methods

fit(column[, y]): Fit the transformer.

fit_transform(column[, y]): Fit the TextEncoder from column.

get_feature_names_out([input_features]): Return a list of features generated by the transformer.

get_params([deep]): Get parameters for this estimator.

set_params(**params): Set the parameters of this estimator.

set_transform_request(*[, column]): Configure whether metadata should be requested to be passed to the transform method.

transform(column): Transform column using the TextEncoder.

- fit(column, y=None, **kwargs)

Fit the transformer.

This default implementation simply calls fit_transform() and returns self.

Subclasses should implement fit_transform and transform.

- Parameters:

- column

a pandas or polars Series Unlike most scikit-learn transformers, single-column transformers transform a single column, not a whole dataframe.

- y

column or dataframe Prediction targets.

- **kwargs

Extra named arguments are passed to self.fit_transform().


- Returns:

- self

The fitted transformer.

- fit_transform(column, y=None)

Fit the TextEncoder from column.

In practice, it loads the pre-trained model from disk and returns the embeddings of the column.

- get_feature_names_out(input_features=None)

Return a list of features generated by the transformer.

Each feature has format {input_name}_{n_component} where input_name is the name of the input column, or a default name for the encoder, and n_component is the index of the specific feature.
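The naming scheme can be illustrated with a few lines of plain Python. This is only a sketch of the documented format, not the library's code: sketch_feature_names is a hypothetical helper, and the fallback to "text_enc" assumes the default described for the input_name_ attribute.

```python
def sketch_feature_names(input_name, n_components):
    """Build names of the form {input_name}_{n_component}."""
    # Assumed fallback, mirroring the documented input_name_ default.
    name = input_name if input_name is not None else "text_enc"
    return [f"{name}_{idx}" for idx in range(n_components)]


print(sketch_feature_names("video comments", 2))
# ['video comments_0', 'video comments_1']
print(sketch_feature_names(None, 3))
# ['text_enc_0', 'text_enc_1', 'text_enc_2']
```

The first call reproduces the column names shown in the Examples section for a Series named 'video comments' encoded with n_components=2.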

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters:

- **params

dict Estimator parameters.


- Returns:

- self

estimator instance

Estimator instance.
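To make the <component>__<parameter> convention concrete, here is a minimal standard-library sketch of how such keys can be split into an estimator's own parameters and those meant for a nested component. split_nested_params is a hypothetical helper written for illustration, and "encoder" is an assumed component name; scikit-learn's actual set_params does more (validation, recursion into sub-estimators).

```python
def split_nested_params(params):
    """Separate top-level parameters from <component>__<parameter> ones."""
    own, nested = {}, {}
    for key, value in params.items():
        # Split on the first double underscore only.
        name, delim, sub_key = key.partition("__")
        if delim:
            nested.setdefault(name, {})[sub_key] = value
        else:
            own[key] = value
    return own, nested


own, nested = split_nested_params(
    {"verbose": True, "encoder__n_components": 10, "encoder__batch_size": 64}
)
print(own)     # {'verbose': True}
print(nested)  # {'encoder': {'n_components': 10, 'batch_size': 64}}
```

With a pipeline whose step is named encoder, a key such as encoder__n_components would therefore target the n_components parameter of that step.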

- set_transform_request(*, column='$UNCHANGED$')

Configure whether metadata should be requested to be passed to the transform method.

Note that this method is only relevant when this estimator is used as a sub-estimator within a meta-estimator and metadata routing is enabled with enable_metadata_routing=True (see sklearn.set_config()). Please check the User Guide on how the routing mechanism works.

The options for each parameter are:

- True: metadata is requested, and passed to transform if provided. The request is ignored if metadata is not provided.
- False: metadata is not requested and the meta-estimator will not pass it to transform.
- None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.
- str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

Added in version 1.3.

- transform(column)

Transform column using the TextEncoder.

This method uses the embedding model loaded in memory during fit or fit_transform.

- Parameters:

- column

pandas or polars Series of shape (n_samples,) The string column to compute embeddings from.


- Returns:

- X_out

pandas or polars DataFrame of shape (n_samples, n_components)

The embedding representation of the input.
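As a rough picture of what the batch_size parameter means for transform, the sketch below splits a list of strings into consecutive chunks. It is an assumption-laden illustration: iter_batches is a hypothetical helper, and the underlying model library may order or group the inputs differently before encoding them.

```python
def iter_batches(texts, batch_size=32):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(texts), batch_size):
        yield texts[start:start + batch_size]


comments = [f"comment {i}" for i in range(70)]
sizes = [len(batch) for batch in iter_batches(comments)]
print(sizes)  # [32, 32, 6]
```

A larger batch_size means fewer passes through the model but more memory per pass, which matters most when running on a GPU.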

Gallery examples

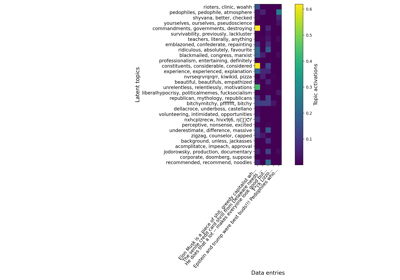

Various string encoders: a sentiment analysis example