fetch_toxicity

- skrub.datasets.fetch_toxicity(data_home=None)

Fetch the toxicity dataset (classification) available at skrub-data/skrub-data-files.

This is a balanced binary classification use case, where the single table consists of only two columns:

- text: the text of the comment
- is_toxic: whether or not the comment is toxic

Size on disk: 220KB.

- Parameters:

- data_home: str or path, default=None

The directory in which to download and unzip the files.

- Returns:

- bunch: sklearn.utils.Bunch

A dictionary-like object with the following keys:

- toxicity: pd.DataFrame, the dataframe. Shape: (1000, 2)
- X: pd.DataFrame, features, i.e. the dataframe without the target labels. Shape: (1000, 1)
- y: pd.DataFrame, target labels. Shape: (1000, 1)
- metadata: a dictionary containing the name, description, source and target

Gallery examples

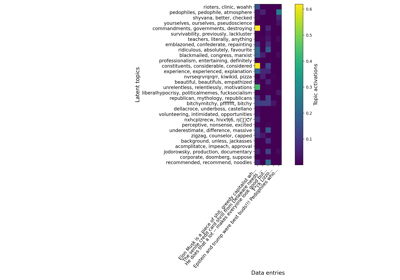

Various string encoders: a sentiment analysis example